Difference between revisions of "Randomized Evaluations: Principles of Study Design"

| (7 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

Randomized evaluations are field experiments involving the assignment of subjects | Randomized evaluations are field experiments involving the assignment of subjects [[Randomization|randomly]] to one of two groups: the '''treatment group''' which receives the policy intervention being evaluated and the '''control group''' which remains untreated. | ||

For the purpose of this article, we will rely on the example of a study designed to evaluate the | For the purpose of this article, we will rely on the example of a study designed to evaluate the effects of cash transfers on farm outputs. In this setup, members of the '''treatment group''' will receive cash transfers in addition to an information pamphlet related to cropping systems like inter-cropping, while the control group will only receive the pamphlet. | ||

The results of such a trial are then used to answer questions about the effectiveness of an intervention, and can prevent inefficient allocation of resources to programs that might prove to be ineffective after a study on a small-scale. | |||

==Read First== | ==Read First== | ||

*This section covers the key principles of study design to guide researchers on best-practices in conducting field evaluations. For more about evaluations, and their types, please see [[Experimental Methods|experimental methods]]. | *This section covers the key principles of study design to guide researchers on best-practices in conducting field evaluations. For more about evaluations, and their types, please see [[Experimental Methods|experimental methods]] and [[Quasi-Experimental Methods|quasi-experimental methods]]. | ||

* There can be various biases that can affect results of an experiment, such as [[Selection Bias | selection bias]], or [[Recall Bias|recall bias]]. | * There can be various biases that can affect results of an experiment, such as [[Selection Bias | selection bias]], or [[Recall Bias|recall bias]]. | ||

*We will also look at ways of tackling these through efficient study design. | *We will also look at ways of tackling these through efficient study design. | ||

==Step 1: Comprehensive protocol for the evaluation== | ==Step 1: Comprehensive protocol for the evaluation== | ||

The first step involves selecting a hypothesis (assumption) that specifies the anticipated link between the predictor variables and the outcomes, that is, the | The first step involves selecting a hypothesis (assumption) that specifies the anticipated link between the predictor '''variables''' and the outcomes, that is, the null hypothesis. | ||

In the cash transfer experiment mentioned at the beginning, this would involve laying out the hypothesis that cash transfers do indeed have an effect on farm output. We would also need to lay out the target population, in terms of geographical coverage, and perhaps an exclusion criteria, limiting the study to only include farmers with annual incomes under $1000. | In the cash transfer experiment mentioned at the beginning, this would involve laying out the hypothesis that cash transfers do indeed have an effect on farm output. We would also need to lay out the target population, in terms of geographical coverage, and perhaps an exclusion criteria, limiting the study to only include farmers with annual incomes under $1000. | ||

===Key Concerns=== | ===Key Concerns=== | ||

*The sample to be studied must be clearly specified, including exclusion | *The [[Sampling|sample]] to be studied must be clearly specified, including exclusion and inclusion criteria. | ||

*Pilot studies can help identify ideal target population, as well as ascertain | *Pilot studies can help identify ideal target population, as well as ascertain take-up rates, that can help with '''sample size''' and [[Power Calculations|power calculations]]. | ||

*The sample size must be selected in a manner that provides a high probability of detecting a | *The '''sample size''' must be selected in a manner that provides a high probability of detecting a [[Minimum Detectable Effect|significant effect]]. | ||

*A good study can be designed by consulting experienced researchers. | *A good study can be designed by consulting experienced researchers. | ||

==Step 2: Randomization== | ==Step 2: Randomization== | ||

Broadly speaking, randomization involves allocating the sample selected (based on calculations in Step 1) into one of two groups: | Broadly speaking, [[Randomization|randomization]] involves allocating the [[Sampling|sample]] selected (based on calculations in Step 1) into one of two groups: '''treatment and '''cotrol groups'''. This is the basis for establishing the '''causal effect''', which is the cornerstone of a '''randomized''' evaluation. See[[Randomization in Stata]] for a technical explanation. | ||

In our cash-transfer study, for instance, this would involve '''randomly''' assigning half, or close to half, of the farmers to the '''treatment''' and '''control groups'''. Alongside this process, we would also need to [[Primary Data Collection|collect baseline data]] on demographics and output in previous period, in order to ensure our '''treatment''' and '''control groups''' are indeed similar in all respects except the intervention. | |||

===Key Concerns=== | ===Key Concerns=== | ||

*Effective randomization is important to tackle the issue of | *Effective [[Randomization|randomization]] is important to tackle the issue of confounding, that is, when a characteristic is associated with the intervention as well as the outcome. For example, if younger people are less likely to experience symptoms of heart disease, and also less likely to visit their doctors for annual check-ups, then an intervention that tries to evaluate the impact of a coronary-health campaign on hospital visits is said to be confounded by age. | ||

*This process must be concealed from the investigator. | *This process must be concealed from the investigator. see also [[Research Ethics]]. | ||

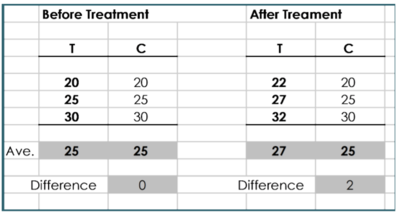

*Initial baseline characteristics must be measured across the two groups, and these should not be | *Initial baseline characteristics must be measured across the two groups, and these should not be significantly different. One solution to this problem is [[Randomization Inference|randomization inference]], a concept that is gaining ground in the field of '''randomized''' evaluations. | ||

*Care must be taken to ensure minimum | *Care must be taken to ensure minimum attrition, that is, dropping out of some subjects after assignment. | ||

*Regardless, outcomes should still be compared against initial members of the control group - this is called | *Regardless, outcomes should still be compared against initial members of the '''control group''' - this is called intention-to-treat. | ||

[[File:Attrition.png|400px|thumb|center|'''Fig.1. Attrition''']] | [[File:Attrition.png|400px|thumb|center|'''Fig.1. Attrition''']] | ||

==Step 3: Intervention, followed by measuring the outcomes== | ==Step 3: Intervention, followed by measuring the outcomes== | ||

The next step is to apply the intervention, and then measure outcomes, called | The next step is to apply the intervention, and then measure outcomes, called endline characteristics, after the pre-determined time-period has passed since the intervention. | ||

In our cash-transfer example, this would involve providing cash transfers to the '''treatment group''' while ensuring that the '''control group''' members do not receive them. | |||

After the intervention timeline is completed, we will [[Primary Data Collection|collect data]] on farm outputs again for the two groups and compare. | |||

===Key Concerns=== | ===Key Concerns=== | ||

*Sufficient time should be given for the intervention to have its intended effect. Premature | *Sufficient time should be given for the intervention to have its intended effect. Premature calculation of outcomes can indirectly affect the [[Power Calculations|power]] of the evaluation by affecting the [[Minimum Detectable Effect|minimum detectable effect size (MDES)]]. | ||

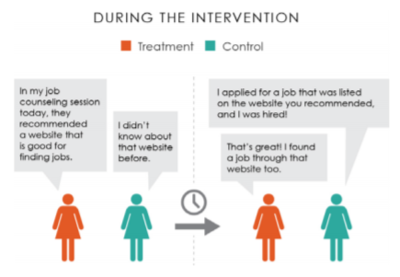

*Blinding of the investigator to the intervention is crucial. It is also important for the subject to be blind to both, the assignment as well as the intervention, to prevent | *Blinding of the investigator to the intervention is crucial. It is also important for the subject to be blind to both, the assignment as well as the intervention, to prevent spillovers. This is called double blinding. | ||

* | *Refer to [[Measuring Abstract Concepts|measuring abstract concepts]] and [[Questionnaire Design|questionnaire design]] for details on further concerns. | ||

[[File:Spill.png|400px|thumb|center|'''Fig.2. Spillovers''']] | [[File:Spill.png|400px|thumb|center|'''Fig.2. Spillovers''']] | ||

==Final Step: Quality Control== | ==Final Step: Quality Control== | ||

Quality control is not just a step that needs to be exercised when measuring outcomes. It is a constant, rigorous process that needs to be carried out at various stages to ensure the integrity of the evaluation. | [[Data Quality Assurance Plan|Quality control]] is not just a step that needs to be exercised when measuring outcomes. It is a constant, rigorous process that needs to be carried out at various stages to ensure the integrity of the evaluation. | ||

It includes dealing with concerns about [[Questionnaire Design|design]], measurement of outcomes, as well as handling data, and [[Personally Identifying Information (PII)|ensuring anonymity]] of subjects. | |||

===Key Concerns=== | ===Key Concerns=== | ||

*Lack of | *Lack of [[Data Quality Assurance Plan|Quality control]] can lead to erroneous conclusions, for instance, evidence of ineffective '''treatment''' even though the problem really was ineffective evaluation. | ||

*This is where training manuals can help, by setting out rigorous standards for investigators, and providing ways to enforce these standards. | *This is where training manuals can help, by setting out rigorous standards for investigators, and providing ways to enforce these standards. | ||

*Training can also include information on standardizing data | *Training can also include information on [[Standardization|standardizing [[Primary Data Collection|data collection]] and reporting. | ||

*See [https://dimewiki.worldbank.org/images/d/da/DATA_FOR_DEVELOPMENT_IMPACT_For_review.pdf. Data for Development Impact] for a more comprehensive discussion on quality control. | *See [https://dimewiki.worldbank.org/images/d/da/DATA_FOR_DEVELOPMENT_IMPACT_For_review.pdf. Data for Development Impact] for a more comprehensive discussion on '''quality control'''. | ||

==Back to Parent== | ==Back to Parent== | ||

This article is part of [[Experimental Methods]]. However, most of the principles highlighted above can be applied in general to all kinds of evaluations, and therefore act as a crucial pointer to anyone looking to foray into the world of evaluations. | This article is part of [[Experimental Methods]]. However, most of the principles highlighted above can be applied in general to all kinds of evaluations, and therefore act as a crucial pointer to anyone looking to foray into the world of evaluations. | ||

| Line 60: | Line 65: | ||


Latest revision as of 17:31, 14 August 2023

Randomized evaluations are field experiments in which subjects are randomly assigned to one of two groups: the treatment group, which receives the policy intervention being evaluated, and the control group, which remains untreated.

For the purpose of this article, we will rely on the example of a study designed to evaluate the effects of cash transfers on farm outputs. In this setup, members of the treatment group will receive cash transfers in addition to an information pamphlet related to cropping systems like inter-cropping, while the control group will only receive the pamphlet.

The results of such a trial are then used to answer questions about the effectiveness of an intervention, and can prevent the inefficient allocation of resources to programs that a small-scale study shows to be ineffective.

Read First

- This section covers the key principles of study design to guide researchers on best practices in conducting field evaluations. For more about evaluations and their types, please see experimental methods and quasi-experimental methods.

- Various biases can affect the results of an experiment, such as selection bias or recall bias.

- We will also look at ways of tackling these through efficient study design.

Step 1: Comprehensive protocol for the evaluation

The first step involves stating a hypothesis that specifies the anticipated link between the predictor variables and the outcomes. This hypothesis is tested against the null hypothesis, which states that no such link exists.

In the cash transfer experiment mentioned at the beginning, this would involve laying out the hypothesis that cash transfers do indeed have an effect on farm output, against the null hypothesis that they have none. We would also need to lay out the target population in terms of geographical coverage, and perhaps an eligibility criterion, such as limiting the study to farmers with annual incomes under $1000.

Key Concerns

- The sample to be studied must be clearly specified, including exclusion and inclusion criteria.

- Pilot studies can help identify the ideal target population and ascertain take-up rates, which in turn inform sample size and power calculations.

- The sample size must be selected in a manner that provides a high probability (power) of detecting a significant effect, if one exists.

- A good study can be designed by consulting experienced researchers.
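The sample size concern above can be made concrete with a short calculation. The following is a minimal sketch, not a substitute for a full power calculation: it assumes a two-sided comparison of two means, equal group sizes, individual-level randomization, and a normal approximation. The function names and the example values (an effect of 0.2 standard deviations, 80% take-up) are illustrative assumptions, not figures from this article.

```python
import math
from statistics import NormalDist


def sample_size_per_arm(mde, sd, alpha=0.05, power=0.80):
    """Subjects needed in EACH group to detect a difference in means of `mde`.

    Uses the normal-approximation formula
        n = 2 * ((z_{1-alpha/2} + z_{power}) * sd / mde) ** 2
    for a two-sided test with equal-sized treatment and control groups.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value of the test
    z_power = NormalDist().inv_cdf(power)          # quantile for the target power
    n = 2 * ((z_alpha + z_power) * sd / mde) ** 2
    return math.ceil(n)


def adjust_for_take_up(n, take_up):
    """Inflate n when only a share `take_up` of the treatment group is treated.

    Assumes nobody in the control group receives the intervention, so the
    average effect across the assigned treatment group shrinks to
    take_up * mde and the required sample grows by 1 / take_up**2.
    """
    return math.ceil(n / take_up ** 2)


# Hypothetical inputs: outcome measured in standard-deviation units.
n = sample_size_per_arm(mde=0.2, sd=1.0)
n_adjusted = adjust_for_take_up(n, take_up=0.8)
print(n, n_adjusted)
```

The take-up adjustment shows why pilot estimates of take-up matter: even moderately incomplete take-up raises the required sample noticeably.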

Step 2: Randomization

Broadly speaking, randomization involves allocating the sample selected (based on calculations in Step 1) into one of two groups: the treatment group and the control group. This is the basis for establishing the causal effect, which is the cornerstone of a randomized evaluation. See Randomization in Stata for a technical explanation.

In our cash-transfer study, for instance, this would involve randomly assigning half, or close to half, of the farmers to the treatment group and the rest to the control group. Alongside this process, we would also need to collect baseline data on demographics and output in the previous period, in order to check that our treatment and control groups are indeed similar in all respects except the intervention.
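A reproducible random assignment for this example can be sketched as follows. This is an illustration under simple assumptions (no stratification or clustering, one list of unique farmer IDs); the function name, the IDs, and the seed value are hypothetical, and the article's own technical reference for this step is Randomization in Stata.

```python
import random


def assign_treatment(farmer_ids, seed=20230814):
    """Randomly assign half (or close to half) of the farmers to treatment.

    Sorting the IDs first and fixing the seed makes the assignment
    reproducible regardless of the order in which the IDs were supplied.
    """
    rng = random.Random(seed)       # private generator, so the seed fully determines the result
    shuffled = sorted(farmer_ids)   # stable starting order
    rng.shuffle(shuffled)           # random order
    n_treat = len(shuffled) // 2    # with an odd total, control gets the extra farmer
    return {
        farmer_id: ("treatment" if position < n_treat else "control")
        for position, farmer_id in enumerate(shuffled)
    }


# Hypothetical sample of 101 farmers.
assignment = assign_treatment(range(1, 102))
counts = {
    group: sum(1 for g in assignment.values() if g == group)
    for group in ("treatment", "control")
}
print(counts)
```

Recording the seed and the sort order alongside the data lets anyone re-create the same assignment later.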

Key Concerns

- Effective randomization is important to tackle the issue of confounding, that is, when a characteristic is associated with the intervention as well as the outcome. For example, if younger people are less likely to experience symptoms of heart disease, and also less likely to visit their doctors for annual check-ups, then a study that tries to evaluate the impact of a coronary-health campaign on hospital visits is said to be confounded by age.

- The assignment process must be concealed from the investigator. See also Research Ethics.

- Initial baseline characteristics must be measured across the two groups, and these should not be significantly different. One approach to assessing such differences is randomization inference, a concept that is gaining ground in the field of randomized evaluations.

- Care must be taken to minimize attrition, that is, subjects dropping out after assignment.

- Regardless of attrition, outcomes should still be compared according to the groups to which subjects were initially assigned. This is called intention-to-treat analysis.
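The baseline comparison described above can be sketched with a standardized mean difference, which expresses the gap between group means in standard-deviation units. This is one common balance diagnostic, shown here as an assumption rather than as the method this article prescribes; the function name and the baseline values are hypothetical.

```python
import math
from statistics import mean, variance


def standardized_difference(treat_values, control_values):
    """Difference in group means divided by the pooled standard deviation.

    Values near zero suggest the groups are similar on this baseline
    characteristic. Returns 0.0 when neither group shows any variation
    and the means coincide.
    """
    pooled_sd = math.sqrt((variance(treat_values) + variance(control_values)) / 2)
    diff = mean(treat_values) - mean(control_values)
    if pooled_sd == 0:
        return 0.0 if diff == 0 else math.copysign(math.inf, diff)
    return diff / pooled_sd


# Hypothetical baseline farm output (tonnes) for each group.
baseline_treatment = [2.1, 2.4, 1.9, 2.6, 2.2, 2.0]
baseline_control = [2.0, 2.5, 2.1, 2.3, 2.2, 1.9]
print(round(standardized_difference(baseline_treatment, baseline_control), 3))
```

The same function can be run over every baseline variable (age, land size, previous output) to produce a simple balance table.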

Step 3: Intervention, followed by measuring the outcomes

The next step is to apply the intervention, and then measure outcomes, called endline characteristics, once a pre-determined period has passed since the intervention.

In our cash-transfer example, this would involve providing cash transfers to the treatment group while ensuring that the control group members do not receive them. After the intervention timeline is completed, we will collect data on farm outputs again for the two groups and compare.
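The endline comparison can be sketched as a simple difference in means between the groups as originally assigned. This is a minimal illustration under stated assumptions: individual-level randomization, no covariate adjustment, and a normal approximation (the 1.96 multiplier) for the 95% confidence interval. The function name and the output figures are hypothetical, not results from any study.

```python
import math
from statistics import mean, variance


def difference_in_means(treat_outcomes, control_outcomes):
    """Estimated effect, its standard error, and an approximate 95% interval.

    Both lists should contain every subject with a measured outcome, grouped
    by ORIGINAL assignment, whether or not they actually took up the
    intervention.
    """
    diff = mean(treat_outcomes) - mean(control_outcomes)
    # Unequal-variance standard error of the difference between two means.
    se = math.sqrt(
        variance(treat_outcomes) / len(treat_outcomes)
        + variance(control_outcomes) / len(control_outcomes)
    )
    interval = (diff - 1.96 * se, diff + 1.96 * se)
    return diff, se, interval


# Hypothetical endline farm output for each group.
endline_treatment = [12, 15, 14, 16, 13]
endline_control = [10, 11, 12, 9, 13]
effect, std_error, ci = difference_in_means(endline_treatment, endline_control)
print(effect, std_error, ci)
```

With samples this small a t-distribution would be more appropriate than the normal approximation; the sketch only shows the structure of the comparison.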

Key Concerns

- Sufficient time should be given for the intervention to have its intended effect. Measuring outcomes prematurely can indirectly affect the power of the evaluation by affecting the minimum detectable effect size (MDES).

- Blinding of the investigator to the intervention is crucial. It is also important for the subject to be blind to both the assignment and the intervention, to prevent spillovers. Blinding both the investigator and the subject is called double blinding.

- Refer to measuring abstract concepts and questionnaire design for details on further concerns.

Final Step: Quality Control

Quality control is not just a step that needs to be exercised when measuring outcomes. It is a constant, rigorous process that needs to be carried out at various stages to ensure the integrity of the evaluation.

It includes dealing with concerns about design, measurement of outcomes, as well as handling data, and ensuring anonymity of subjects.

Key Concerns

- Lack of quality control can lead to erroneous conclusions, for instance, apparent evidence of an ineffective treatment when the real problem was an ineffective evaluation.

- This is where training manuals can help, by setting out rigorous standards for investigators, and providing ways to enforce these standards.

- Training can also include information on standardizing data collection and reporting.

- See Data for Development Impact for a more comprehensive discussion on quality control.

Back to Parent

This article is part of Experimental Methods. However, most of the principles highlighted above can be applied in general to all kinds of evaluations, and therefore act as a crucial pointer to anyone looking to foray into the world of evaluations.