Difference between revisions of "Master Do-files"

Revision as of 13:19, 30 November 2017

The master do-file is the main do-file, used to call all the other do-files in a project. Running it should run every file the project needs, from importing raw data through cleaning, constructing, analysing and outputting results. The file therefore also functions as a map to the data folder.

Read First

- The command iefolder sets up the master do-file according to the description in this article for you.

- The person creating the master do-file should be able to run the do-files for all stages (cleaning, construction, analysis, exporting tables, etc.) from the master do-file, and anyone else should be able to run all of those stages and get exactly the same results by running only the master do-file after changing the path global to the location where the project is stored on their computer.

Purpose of a Master Do-File

A master do-file has the following three purposes:

- The first purpose is to make it possible to run all code related to a project by running only one do-file. This is incredibly important for replicability.

- The second purpose is to set up globals. The most important type of global is folder paths. They enable dynamic file paths, which allow multiple users to run the same code, shorten the file paths used in the code, and make it possible to move files and folders with minimal updates to the code. Standardized conversion rates are another example of globals.

- The third purpose is that this file is the main map to the DataWork folder.

Each of these purposes is described in more detail below.

Purpose 1. Run do-files needed for the data work

As projects grow large it becomes impractical, if not impossible, to write all code in a single do-file. Commonly the code is separated into one file per high-level task, e.g. cleaning, analysis etc. But given the complexity of today's data work, even the code needed for a single high-level task such as cleaning or analysis is often too long to fit practically in one do-file. Therefore, a typical project has a large number of do-files. It would be impossible to run all of these files manually without a great risk of making errors, but with a master do-file that is no longer an issue. Using Stata's do command, a do-file can run other do-files. In a master do-file you will find a section where the other do-files needed for the project are run. Typically you will have multiple master do-files. For example, you have one for each round of data collection (baseline, endline etc.) that runs the files needed for all the data work for that round. Then you have a project master do-file that runs the round master do-files in the correct order, and each round master do-file runs the code needed for that round in the correct order. This way, you can run all code related to the project with a single click.
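As a rough illustration of a project master do-file calling round master do-files, a minimal sketch could look like the following. The sub-folder names, file names and the on/off switches are assumptions made for this example and are not prescribed by this article; with both switches left at 0 the sketch runs without touching any files.

```stata
* Project master do-file (sketch): run each round's master do-file in order.
* All folder and file names below are hypothetical.
global projectfolder  "C:/Users/AnnDoe/Dropbox/Project ABC"
global dataWorkFolder "$projectfolder/DataWork"

* Switches: change 0 to 1 to run a round. The files must exist on disk.
local run_baseline 0
local run_endline  0

if `run_baseline' == 1 {
    do "$dataWorkFolder/Baseline/baseline_MasterDofile.do"
}
if `run_endline' == 1 {
    do "$dataWorkFolder/Endline/endline_MasterDofile.do"
}
```

Keeping the do commands in one place and in a fixed order is what makes the "single click" run possible; the switches are only a convenience while developing.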

Purpose 2. Set globals

In the master do-file we store a wide variety of information in globals that we want to make sure is used exactly the same across the project. Here are some of the most common types of globals set up in the master do-file, but any project could have unique types of globals.

File paths. Most projects use a shared folder service like DropBox, Box, OneDrive, Google Drive etc. All collaborators on a project are likely to have slightly different file paths to these shared folders, for example, "C:/Users/JohnSmith/Dropbox" or "C:/Users/AnnDoe/Dropbox". We can use globals to create dynamic file paths so that all collaborators can use the same do-file. We can also use globals to shorten the file paths used in the code. Also, if someone moves their Dropbox folder, they only have to update this one global instead of every file reference in all do-files.

Conversion rates. Another common use of globals is to standardize conversion rates. If you, for example, need to convert amounts between currencies in your code, then you should store that conversion rate in a global, and each time you convert an amount you reference the global instead of typing out the exchange rate. This practice reduces the risk of typos, and it reduces the risk that different conversion rates, from different sources or points in time, are used in the project. It also makes updates very easy, as you only need to update the global in one location and then run the master do-file to update all the analysis. You should use this for standardized conversions of length, weight, volume etc., as well as for your best estimates of local non-standardized conversion rates.

Control Variables. If a project has a set of control variables that needs to be easy to update across the project, you can store the list in a global here, and each regression that uses that list of control variables should reference the global. If the list needs to be updated by, for example, adding one variable, then that is done in the master do-file, and all the outputs are updated with the new list the next time someone runs the master do-file.
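The conversion-rate practice described above could be sketched as follows. The global name usd_per_lcu, the rate 0.0012 and the toy data are all made up for illustration.

```stata
* Hypothetical exchange rate, defined once in the master do-file
global usd_per_lcu 0.0012

* Toy data so that the sketch runs on its own
clear
set obs 3
generate double income_lcu = 100000 * _n

* Every conversion references the global instead of a typed-out number
generate double income_usd = income_lcu * $usd_per_lcu
list
```

If the agreed rate changes, only the global line is edited, and every do-file that references $usd_per_lcu picks up the new value on the next run of the master do-file.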
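A minimal sketch of the control-variable practice, using the auto data set that ships with Stata. The global name controls and the choice of variables are only examples.

```stata
* Example data shipped with Stata
sysuse auto, clear

* Defined once in the master do-file (the variable choice is illustrative)
global controls weight length foreign

* Each regression references the global instead of repeating the list
regress price mpg $controls
regress price mpg headroom $controls
```

Adding a variable to the global changes both regressions at once, which is the point of keeping the list in a single place.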

Purpose 3. Map to DataWork Folder

Since all code can be run from this file, since all important folders are pointed to with globals, and since all outputs are (indirectly) created by this file, this file is the starting point for finding where any do-file, data set or output is located in the DataWork folder. Other files that could help with navigating the folder are a Word document or a PDF describing how to navigate the sub-folders. Such files are not included in our folder template, but may sometimes be a good addition. However, those files need to be kept updated in parallel with the code, which often does not happen even when that is the intention.

Components of a Master Do file

Since the master do-file acts as a map to all the other do-files in the project, it is important that it is well organized and contains all the information needed during the analysis. Some of the necessary components of a master do-file are as follows:

Intro Header

The intro header should contain descriptive information about the do-file, so that somebody who does not know the do-file can read the header and understand what the do-file does and what it produces. Examples of information to put in the header are the purpose of the do-file, the outline of the do-file, the data files required to run the do-file correctly, the data files created by the do-file, the name of the variable that uniquely identifies the unit of observation in the datasets, etc.
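An intro header along these lines could be written as a comment block like the one below. This is only an illustrative layout; the project name, file names and the ID variable hh_id are hypothetical placeholders.

```stata
/* ******************************************************************
   Project ABC (hypothetical): master do-file

   PURPOSE:   Runs all do-files from raw data to final outputs
   OUTLINE:   PART 1: Install commands and harmonize settings
              PART 2: Set folder globals
              PART 3: Run the round master do-files
   REQUIRES:  baseline_raw.dta (hypothetical file name)
   CREATES:   baseline_clean.dta (hypothetical file name)
   ID VAR:    hh_id (hypothetical; uniquely identifies households)
   ****************************************************************** */
```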

Install user-written commands and set the same settings for all users

After the intro header, settings that are used throughout the project should also be declared in the master do-file. This section is important because different settings for different users could result in users getting different results.

Installing ietoolkit and other user written commands

Master do-files created by iefolder include a line where the package ietoolkit is installed. After this line you can add other commands needed in the project. It is common for these lines to be commented out, as each installation takes a little while, but it is important for replicability that these lines are here, commented out or not. In the end this section will look something like:

*Install all packages that this project requires:

ssc install ietoolkit, replace

ssc install outreg2 , replace

ssc install estout , replace

ssc install ivreg2 , replace

The replace option makes sure that the latest version of the command, with updated functionality, is installed even if a previous version has already been installed on the computer.
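Since each installation takes a little while, one possible alternative to commenting the lines out is to install a package only when its command is missing. The sketch below is not part of the iefolder template. It assumes that each package is on SSC under the same name as its main command and that the computer is online, and unlike the replace option it does not update packages that are already installed.

```stata
* Install each package only if Stata cannot find its command
foreach command in ietoolkit outreg2 estout ivreg2 {
    capture which `command'
    if _rc == 111 {
        * Return code 111 means the command was not found
        ssc install `command'
    }
}
```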

Harmonize settings for all users

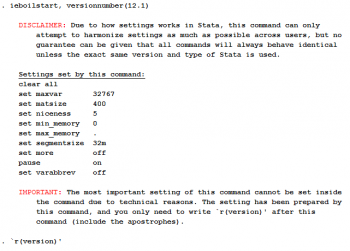

Stata allows the user to customize a large number of settings. These settings include the Stata version, memory settings, code interpretation settings etc. If two users with different settings run the same code, the code might not run the same way for both users. In most of those cases the code simply crashes for one of the users, but in some cases the code runs for both users and they end up with different results. We have created the command ieboilstart, which harmonizes these settings across users. It sets the settings to the values recommended by Stata, but in most cases it does not matter which values are used, as long as all users use the same values. You use ieboilstart like this:

*Standardize settings across users

ieboilstart, version(12.1) //Set the version number to the oldest version used by anyone in the project team

`r(version)' //This line is needed to actually set the version from the command above

Arguably the most important setting is the version setting. Stata has no recommended value for it, so it is the only setting you need to specify manually. We recommend using the oldest version that anyone on your team is likely to ever use. Once you have done a randomization that is meant to be replicable in your project, you must not change this value, or your randomization would no longer be replicable. Read the help file for ieboilstart for a more detailed description of the settings the command sets for you.
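To illustrate why the version matters for randomization, here is a minimal sketch of a randomization set up to be replicable. It assumes ietoolkit is installed; the seed value and the use of the auto data set are arbitrary choices for the example. The result is only reproducible if the version, the seed and the sort order all stay the same.

```stata
* Fix the version before any randomization (assumes ietoolkit is installed)
ieboilstart, versionnumber(12.1)
`r(version)'

sysuse auto, clear

* Sort on a unique ID so that the starting order is always the same
isid make
sort make

* Arbitrary seed chosen for this example
set seed 650413
generate random = runiform()

* Assign the half of the sample with the lowest draws to treatment
sort random
generate treatment = (_n <= _N/2)
```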

Root folder globals

The root folder globals indicate where each user has the project folder on their computer. This allows multiple users to run the same do-files with only a very minor modification. In the code below the global user is set to 1, meaning that Stata will use Ann's folder location. If John would like to run the code he changes the user number to 2, and as long as all file references in all do-files use these globals to build the file location dynamically instead of typing it out literally each time, John can run all the code. If a third user wants to run the same code, that user adds the same information and uses user number 3.

*User Number:

* Ann 1

* John 2

* Add more users here as needed

*Set this value to the user currently using this file

global user 1

* Root folder globals

* ---------------------

if $user == 1 {

global projectfolder "C:/Users/AnnDoe/Dropbox/Project ABC"

}

if $user == 2 {

global projectfolder "C:/Users/JohnSmith/Dropbox/Project ABC"

}

This code can be changed so that Stata detects which user is running it, so that no manual change is needed when switching between users. You do this with Stata's built-in c-class value c(username), which returns the username of the operating system account (Windows etc.) that is running Stata. Change if $user == 1 { to if c(username) == "your_username" { for each user. You still have to add new users manually.
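A sketch of the username-based version is shown below. The usernames AnnDoe and JohnSmith are assumed from the example folder paths, and the final check is an addition for this example rather than part of the original template. Typing display c(username) in Stata shows your own username.

```stata
* Start from an empty global so that a value from an earlier run is not reused
global projectfolder ""

* Root folder globals, selected by operating system username (hypothetical names)
if c(username) == "AnnDoe" {
    global projectfolder "C:/Users/AnnDoe/Dropbox/Project ABC"
}
if c(username) == "JohnSmith" {
    global projectfolder "C:/Users/JohnSmith/Dropbox/Project ABC"
}

* Warn if the current user has not been added yet
if "$projectfolder" == "" {
    display as error "No root folder defined for user `c(username)'"
}
```

A stricter variant could stop the do-file at that point instead of only displaying a message, so that later file references do not fail with a less informative error.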

Project folder globals

* Project folder globals

* ---------------------

global dataWorkFolder "$projectfolder/DataWork"

global baseline "$projectfolder/DataWork"

global endline "$projectfolder/DataWork"

Standardization of Units and Assumptions

Conversion rates for standardizing units, and any assumptions that need to be defined, should be defined as globals in the master do-file. Variable lists commonly used across the project are also defined using globals in the master do-file. Since globals defined in one do-file are also available in other do-files throughout a Stata session, it is important to declare all the globals needed during the project in the master do-file.

Sub Master do-file(s)

Sub Master do-files are similar to a Master do-file except that they perform a single function, whereas the Master do-file runs all the necessary do-files from the raw data stage to the analysis and output stage. A sub Master do-file could be a do-file that runs all the do-files and commands used to generate the graphs produced for a project. Instead of including each do-file used to produce those graphs in the Master do-file, one could create a sub Master do-file for graph outputs that is called by the Master do-file. Following this technique one could have sub Master do-files for graph outputs, regressions, and data cleaning, all of which are called by the Master do-file.

Implementation

DIME's Stata command ieboilstart, from the ietoolkit package, declares all the basic settings needed to standardize how code runs across multiple people working on the same project. This can be done by adding the following lines of code to every do-file.

ssc install ietoolkit, replace

ieboilstart, versionnumber(version_number) options

`r(version)'

Declaring these commands at the top of every do-file used by members of the project ensures that the version settings are the same across all runs for the project. However, the globals and any extra installed commands still need to be declared as well.
