Data Management

Mrijanrimal


(68 intermediate revisions by 7 users not shown)

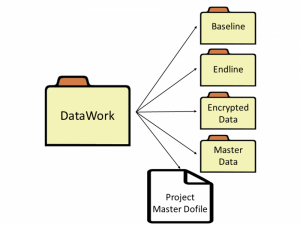

Example of a data folder structure used during the course of an impact evaluation project.


Latest revision as of 16:17, 26 June 2023

Due to the long life span of a typical impact evaluation, multiple generations of team members often contribute to the same data work. Clear methods for organizing the data folder, structuring the data sets in the folder, and identifying the observations in the data sets are therefore critical.

Read First

- An important step before starting with data management is creating a data map.

- The data folder structure suggested here can easily be set up with the Stata command iefolder in the ietoolkit package.

- A dataset should always have one uniquely identifying variable. If you receive a data set without an ID, the first thing you need to do is to create individual IDs.

- Always create master data sets for each unit of observation relevant to the analysis.

- Never merge on variables that are not ID variables unless one of the data sets being merged is the master data set.
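The ID rules above can be checked in code. The sketch below is a minimal illustration in Python rather than Stata (the language the page's tools target); it represents a data set as a list of dictionaries, and the function names `is_uniquely_identified` and `assign_ids` are hypothetical, not part of ietoolkit.

```python
def is_uniquely_identified(rows, id_vars):
    """Return True if id_vars are never missing and jointly unique across rows."""
    seen = set()
    for row in rows:
        key = tuple(row.get(v) for v in id_vars)
        # Fully identifying: no observation may have a missing ID value.
        if any(k is None for k in key):
            return False
        # Uniquely identifying: no two observations may share the same key.
        if key in seen:
            return False
        seen.add(key)
    return True


def assign_ids(rows, id_var="id", start=1):
    """Return copies of rows, each given a new sequential integer ID."""
    return [dict(row, **{id_var: i}) for i, row in enumerate(rows, start=start)]


households = [{"head": "A"}, {"head": "B"}, {"head": "B"}]
print(is_uniquely_identified(households, ["head"]))  # False: "B" repeats
households = assign_ids(households, id_var="hhid")
print(is_uniquely_identified(households, ["hhid"]))  # True
```

Once created, new IDs should be saved and reused rather than regenerated, so that they stay stable across rounds. In Stata, the built-in `isid` command performs the same uniqueness and missing-value check.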

Organization of Project folder

A well-organized data folder is essential to productive workflows for the whole Impact Evaluation Team. It is one of the most important steps both for the team's productivity and for reducing sources of error in the data work. There is no universal best way to organize a project folder; what matters most is that every project plans ahead carefully when setting up a new project folder. Starting from a project folder template is a good idea. Below is a detailed description of DIME's Folder Standard.

DIME Folder Standard

At DIME we have a standardized folder template for organizing our data work folders. Some projects have unique folder requirements, but we still recommend that all projects use this template as a starting point. We have published a command called iefolder, in the ietoolkit package available through SSC, that sets up this folder structure for you. The paragraphs below briefly summarize the data folder organization.

Most projects have a shared folder, for example on Box or Dropbox. The project folder typically has several subfolders, such as government communications, budget, impact evaluation design, and presentations. There should also be one folder for all the data work, which our standard template calls DataWork. All data-related work on the project should be stored in DataWork.
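As a rough illustration of keeping all data work under one DataWork folder, the Python sketch below creates a small folder tree with the standard-library `pathlib` module. The subfolder names are hypothetical placeholders chosen for this example; they are not the exact structure that iefolder generates.

```python
import tempfile
from pathlib import Path

# Hypothetical subfolder layout, for illustration only.
DATAWORK_SUBFOLDERS = [
    "MasterData",
    "EncryptedData",
    "Baseline/DataSets/Raw",
    "Baseline/DataSets/Intermediate",
    "Baseline/DataSets/Final",
    "Baseline/Dofiles",
    "Baseline/Output",
]


def create_datawork(project_root):
    """Create <project_root>/DataWork and its subfolders; return the DataWork path."""
    datawork = Path(project_root) / "DataWork"
    for sub in DATAWORK_SUBFOLDERS:
        # parents=True builds intermediate folders; exist_ok=True makes re-runs safe.
        (datawork / sub).mkdir(parents=True, exist_ok=True)
    return datawork


# Usage: build the tree in a throwaway directory and list the top level.
with tempfile.TemporaryDirectory() as tmp:
    dw = create_datawork(tmp)
    print(sorted(p.name for p in dw.iterdir()))  # ['Baseline', 'EncryptedData', 'MasterData']
```

Defining the layout once, in code, means every project starts from the same structure; in practice iefolder does this for Stata users.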

The DataWork folder structure must be planned carefully when it is first set up, so that it does not cause problems as the project evolves. DIME's folder structure template is based on best practices developed at DIME to help avoid those problems. A data folder should also include a master do-file, which runs all other do-files and also serves as a map for navigating the data folder. The project should also have clear naming conventions. This may sound more difficult than it is; the process is easy if you use iefolder.
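The master do-file idea (one script that runs every other step in a fixed order and doubles as a map of the project) can be sketched in Python as follows. The step names and the on/off switches are hypothetical; in a Stata project each step would instead be a `do` call to a do-file.

```python
# Hypothetical pipeline steps. In a Stata project each would be a do-file
# called from the master do-file; here each just prints what it would do.
def clean_baseline():
    print("cleaning baseline data")


def construct_indicators():
    print("constructing indicators")


def run_analysis():
    print("running analysis")


# The ordered list doubles as a map of the data work: reading it top to
# bottom shows which step runs when. The True/False switch lets a user
# skip a step (for example, while debugging) without deleting it.
STEPS = [
    ("cleaning", clean_baseline, True),
    ("construct", construct_indicators, True),
    ("analysis", run_analysis, False),
]


def run_master(steps=STEPS):
    """Run every switched-on step in the listed order; return the names run."""
    ran = []
    for name, func, enabled in steps:
        if enabled:
            func()
            ran.append(name)
    return ran


print(run_master())
```

Keeping the order in one place means the whole project can be rerun from raw data with a single command, which is the property the master do-file is meant to provide.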

Master data sets

With multiple rounds of data, you need to ensure there are no discrepancies in how observations are identified across survey rounds. Best practice is to have one data file that lists all observations, typically called a master data set. We need a master data set for each unit of observation relevant to the analysis (survey respondent, unit of randomization, etc.). Common examples are household, village, and clinic master data sets.

These master data sets should include time-invariant information, for example ID variables and dummy variables indicating treatment status, so that data sets can easily be merged. This information should cover all observations encountered, even those that were not selected during sampling. This is a small but important point; see the master data set page for more details.
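A minimal sketch of the master data set idea, assuming each data set is a list of dictionaries keyed by a hypothetical household ID `hhid`: the master holds time-invariant information (here a `sampled` flag and `treatment` status) for every household encountered, including one that was not sampled, and a survey round is merged onto it by ID only. The function name `merge_on_master` is made up for this example.

```python
def merge_on_master(master, round_data, id_var="hhid"):
    """Attach time-invariant master variables to each survey observation.

    Raises ValueError if the master is not unique on id_var or if the round
    contains IDs that are missing from the master.
    """
    index = {}
    for row in master:
        if row[id_var] in index:
            raise ValueError(f"duplicate {id_var} in master: {row[id_var]}")
        index[row[id_var]] = row
    unmatched = [r[id_var] for r in round_data if r[id_var] not in index]
    if unmatched:
        raise ValueError(f"IDs not in master data set: {unmatched}")
    # Master values come first; a survey variable with the same name overrides it.
    return [{**index[r[id_var]], **r} for r in round_data]


master = [
    {"hhid": 1, "village": 10, "sampled": True, "treatment": 1},
    {"hhid": 2, "village": 10, "sampled": True, "treatment": 0},
    {"hhid": 3, "village": 11, "sampled": False, "treatment": None},  # listed, not sampled
]
baseline = [{"hhid": 1, "income": 250}, {"hhid": 2, "income": 310}]
merged = merge_on_master(master, baseline)
print(merged[0])  # {'hhid': 1, 'village': 10, 'sampled': True, 'treatment': 1, 'income': 250}
```

In Stata, `merge m:1 hhid using` with the master data set as the using file plays a similar role, since `merge` stops with an error when the key does not uniquely identify observations on the side declared unique.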

ID Variables

All data sets must be uniquely and fully identified; see properties of an ID variable for details. In almost all cases the ID should be a single variable. One common exception is panel data, where each observation is identified by the primary ID variable together with a time variable (year one, year two, etc.). As soon as a data set has one numeric, unique, fully identifying variable, separate all personally identifiable information (PII) from the data set. The PII should be encrypted and saved separately in a secure location.
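The sketch below illustrates two points from this section, assuming a panel stored as a list of dictionaries with hypothetical variables `hhid` and `round` and hypothetical PII variables `name` and `phone`. It checks that the ID and time variables together identify each observation, then splits the PII into a separate table linked only through the ID. Encrypting and securely storing that PII table is not shown and must be handled separately.

```python
PII_VARS = ("name", "phone")  # hypothetical PII variable names


def check_panel_ids(rows, id_var="hhid", time_var="round"):
    """Raise ValueError unless (id_var, time_var) uniquely and fully identifies rows."""
    seen = set()
    for row in rows:
        key = (row.get(id_var), row.get(time_var))
        if None in key:
            raise ValueError(f"missing panel key: {key}")
        if key in seen:
            raise ValueError(f"duplicate panel key: {key}")
        seen.add(key)


def split_pii(rows, id_var="hhid", pii_vars=PII_VARS):
    """Return (deidentified_rows, pii_rows), linked only through id_var."""
    deidentified = [{k: v for k, v in row.items() if k not in pii_vars} for row in rows]
    pii = {}
    for row in rows:
        # Keep one PII record per ID (the first one seen), even in panel data.
        if row[id_var] not in pii:
            record = {id_var: row[id_var]}
            record.update({k: row[k] for k in pii_vars if k in row})
            pii[row[id_var]] = record
    return deidentified, list(pii.values())


panel = [
    {"hhid": 1, "round": 1, "name": "Ana", "phone": "555-0101", "income": 250},
    {"hhid": 1, "round": 2, "name": "Ana", "phone": "555-0101", "income": 270},
]
check_panel_ids(panel)  # passes: (hhid, round) is unique
data, pii = split_pii(panel)
print(data[0])  # {'hhid': 1, 'round': 1, 'income': 250}
print(pii)      # [{'hhid': 1, 'name': 'Ana', 'phone': '555-0101'}]
```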

Git and GitHub

Git is a version control tool used extensively in computer science to manage code. It is especially good for collaboration, but it is also very helpful in single-person projects. In the early days of Git you had to manage your own code repository through the command line and set up your own servers to share code, but several cloud-based solutions with non-technical interfaces now exist, and GitHub is the most commonly used one in the research community. Other commonly used Git hosting services are GitLab and Bitbucket. Since they all build on Git, they share most features, and skills learned on one transfer to the others.

GitHub offers tools that provide less technical ways to interact with Git, which is probably why it is the most popular Git service in the research community. A drawback of GitHub used to be that free accounts could not create private repositories (although they could be invited to them); since 2019, however, free GitHub accounts can create private repositories as well. GitLab, for example, also allows private repositories on free accounts, but in our experience it relies more on the command line for contributing code. We have created resources, and provide links to additional external resources for GitHub, on our Getting started with GitHub page.

Additional Resources

- Innovations for Poverty Action, Best practices on data and code management

- DIME Analytics (World Bank), Data Management

- DIME Analytics (World Bank), Data Cleaning

- DIME Analytics (World Bank), Frequent Reproducibility Mistakes in Stata and How to Avoid Them

- DIME Analytics (World Bank), How To: DIME Tools and Protocols for Reproducible Research