Difference between revisions of "Monitoring Data Quality"



== Back Checks ==

[[Back Checks|Back checks]] allow the '''field team''' to verify the correctness and [[Data Quality Assurance Plan|quality]] of [[Primary Data Collection|survey data]]. In a '''back check survey''', an experienced '''enumerator''' visits the same '''respondent''' around 10-15 minutes after the actual [[Field Surveys|interview]], and asks selected questions again. The answers from this '''back check survey''' are then compared with answers from the original survey. Keep the following things in mind about '''back checks''':

* '''Methodology.''' Make sure every enumerator and every field team is '''back-checked''' as soon as possible. As a general rule, '''back-check''' 10-20% of the [[Sampling|sample]], with 20% of the '''back checks''' in the first two weeks of [[Field Surveys|fieldwork]].

* '''Selection of respondents.''' Use a random sub-sample, and select the sub-sample in advance if possible, for instance on the basis of data from a [[Survey Pilot|survey pilot]] or a '''baseline''' (first round) survey. If some enumerators are suspected of cheating, '''back-check''' their interviews too. If [[High Frequency Checks|other quality tests]] identified errors in some [[Unit of Observation|observations]], '''back-check''' these observations to resolve these errors.

* '''Categorize.''' Divide the questions for the [[Back Checks#Designing the Back Check Survey|back check survey]] into the following categories:

** '''Straightforward questions''' where there is very little possibility of error. For example, '''age''' and '''education'''. If there is an error in these variables, it means there is a serious problem with the enumerator, or with the questions.

** '''Sensitive or calculation-based questions''' where a more experienced enumerator will get the correct answer. For example, questions based on sensitive topics, or questions that involve calculations. If there is an error in these variables, there might be a need to provide further [[Enumerator Training|training for enumerators]].

** '''Specific questions''' about the [[Questionnaire Design|survey instrument]]. Errors in this case provide feedback which can help improve the '''survey instrument''' itself.

* '''Resources.''' <code>[https://github.com/PovertyAction/bcstats bcstats]</code> is a Stata package by [https://www.povertyactionlab.org/ J-PAL] and [https://www.poverty-action.org/ IPA] that automatically compares data from '''back check surveys''' and the main survey. It also provides an outline for [[Data Analysis|analysis]] of this data.
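The comparison between back check answers and original answers can be illustrated with a short script. This is only a minimal Python sketch and not how <code>bcstats</code> works: the function name, the household IDs, the enumerator codes, and the answers are all hypothetical, and real back check data would normally be compared in a statistical package.

```python
def back_check_error_rates(original, back_check, enumerator_of, questions):
    """Share of back-checked answers that differ from the original, per enumerator.

    original, back_check: dict mapping household ID -> dict of answers.
    enumerator_of: dict mapping household ID -> enumerator who did the original interview.
    questions: list of question names included in the back check survey.
    """
    compared = {}
    mismatched = {}
    for hhid, bc_answers in back_check.items():
        enumerator = enumerator_of[hhid]
        for question in questions:
            compared[enumerator] = compared.get(enumerator, 0) + 1
            if original[hhid].get(question) != bc_answers.get(question):
                mismatched[enumerator] = mismatched.get(enumerator, 0) + 1
    return {e: mismatched.get(e, 0) / n for e, n in compared.items()}


# Hypothetical data: two back-checked households, two straightforward questions.
original = {
    "H001": {"age": 34, "education": "primary"},
    "H002": {"age": 51, "education": "none"},
}
back_check = {
    "H001": {"age": 34, "education": "primary"},
    "H002": {"age": 45, "education": "none"},
}
enumerator_of = {"H001": "E1", "H002": "E2"}

rates = back_check_error_rates(original, back_check, enumerator_of, ["age", "education"])
```

A high error rate on straightforward questions such as age would point to a problem with the enumerator or the question, in line with the categories above.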

== Data Quality Checks for Remote Surveys ==

In the case of [[Remote Surveys|remote surveys]], '''monitoring data quality''' becomes even more important. Poor quality data in '''remote surveys''' can at best reduce the effectiveness of a policy intervention, and at worst require a repeat of the entire process of [[Primary Data Collection|data collection]]. Given below are some possible steps to conduct data quality checks for remote surveys.

=== Random audio audits ===

'''Random audio audits''' consist of recording random portions of an interview. They allow the [[Impact Evaluation Team|research team]] to assess the following for a [[Computer-Assisted Personal Interviews (CAPI)|CAPI]] or [[Phone Surveys (CATI)|phone survey]]:

* '''Status:''' That is, if the interview actually took place.

* '''Enumerator behaviour:''' That is, if the '''enumerator''' introduced themselves properly, if they read out the [[Informed Consent|informed consent]], and so on.

* '''Accuracy of collected data:''' That is, if the responses match what was entered by the enumerator.

Especially in the case of '''phone surveys (CATI)''', the '''research team''' can use '''random audio audits''' as the main tool for [[Data Quality Assurance Plan|data quality assurance]].

=== Phone-based back checks ===

[[Back Checks|Back checks]] involve selecting a small set of questions from the original survey, and then asking them again to a randomly selected subset of the sample. The research team then compares these answers with the original answers to identify gaps in [[Enumerator Training|enumerator training]] and detect enumerator fraud. For [[Phone Surveys (CATI)|phone surveys]], the research team can perform '''phone-based back checks''' along with '''random audio audits''' to ensure proper [[Data Quality Assurance Plan|data quality]]. However, these may suffer from low response rates, and there might be a [[Selection Bias|selection bias]], since only those '''respondents''' who have a phone can be '''back-checked'''.

== Related Pages ==

[[Special:WhatLinksHere/Monitoring_Data_Quality|Click here for pages that link to this topic.]]

== Additional Resources ==

* DIME Analytics (World Bank), [https://osf.io/t48ug Data Quality Checks]

* DIME Analytics (World Bank), [https://osf.io/j8t5f Assuring Data Quality]

* DIME Analytics (World Bank), [https://osf.io/ek9ab/ Survey Quality Part 1]

* DIME Analytics (World Bank), [https://osf.io/4q6kb/ Survey Quality Part 2]

* Innovations for Poverty Action, [https://github.com/PovertyAction/high-frequency-checks/wiki/Background Template for high frequency checks]

* IPA-JPAL-SurveyCTO, [https://d37djvu3ytnwxt.cloudfront.net/assets/courseware/v1/30701099ebb94072fdfcf1ec96d8227a/asset-v1:MITx+JPAL102x+1T2017+type@asset+block/4.6_High_quality_data_accurate_data.pdf Collecting High Quality Data]

* SurveyCTO, [https://www.youtube.com/watch?v=tHb-3bnfRLo Data quality with SurveyCTO]

* SurveyCTO, [https://docs.surveycto.com/02-designing-forms/01-core-concepts/03ze.field-types-audio-audit.html Guide to adding audio audits]

* SurveyCTO, [https://www.surveycto.com/case-studies/monitoring-and-visualization/ Monitoring and Visualization]

[[Category: Research Design]]

Latest revision as of 18:32, 28 June 2023

Ensuring high data quality during primary data collection involves anticipating everything that can go wrong, and preparing a comprehensive data quality assurance plan to handle these issues. Monitoring data quality from the field is an important part of this broader plan, and involves four components: communication and reporting, field monitoring, minimizing attrition, and real-time data quality checks. Each of these steps allows the research team to identify and correct issues by using feedback from multiple rounds of piloting, re-training enumerators accordingly, and reviewing and re-drafting protocols for efficient field management.

Read First

- This page covers the various aspects of monitoring data quality in the field, and is part of the wider data quality assurance plan.

- The research team should discuss steps for monitoring data quality as part of field management.

- The field teams, comprising field coordinators (FCs), supervisors, and field managers, should regularly monitor data quality during field data collection.

- The field teams should communicate clearly with other members of the team, as well as with enumerators, and focus on minimizing attrition.

- Attrition occurs when some study participants leave in the middle of an impact evaluation.

- Run daily data quality checks, including back checks, high frequency checks and identity checks.

- Testing for all different issues that may arise in a survey can be time-consuming. Identify and focus on the issues that are most likely to affect the results.

Communication and Reporting

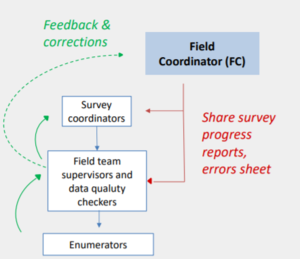

During data collection, the field teams are responsible for monitoring data quality in the field. It is therefore important to ensure proper communication, both within the team, as well as with the respondents. The structure of the field team is given below (see Figure 1):

- Field coordinators (FCs): The field coordinators (FCs) oversee the overall process of field data collection. They receive feedback from the supervisors and suggest ways to make necessary changes in the process of data collection. They also share survey progress reports and observations with supervisors.

- Survey coordinators: The survey coordinators coordinate with the supervisors and data quality checkers on a regular basis to review the quality of data that is collected.

- Data quality checkers: The data quality checkers carry out regular checks, such as back checks and high frequency checks to identify problems in the data that is shared by enumerators. If there are any issues in the collected data, they share the feedback with the survey coordinators.

- Supervisors: The supervisors monitor the work of the enumerators and communicate with them to resolve any issues that the enumerators might be facing. They share this feedback with the FCs.

- Enumerators: The enumerators are responsible for asking the questions to the respondents through interviews. These interviews can be in the form of computer-assisted personal interviews (CAPI), phone interviews (or CATI), or pen-and-paper personal interviews (PAPI).

Keep these guidelines in mind to ensure proper communication and reporting during data collection:

- Set up a good feedback mechanism. The field teams should meet at the end of every day to share experiences and challenges faced. Use an instant-messaging platform for communication within the team. The field team can store these conversations in a shared folder online, so that everyone can revisit the discussions later.

- Account for connectivity issues. It might not always be possible to share completed survey forms, or receive feedback based on high frequency checks (HFCs). Train supervisors so they can handle most of the common issues without consulting the field coordinator (FC). However, make sure that the gap between two feedback sessions does not exceed 48 hours.

- Communicate effectively with respondents. At the same time, train enumerators to communicate and interact with the respondent. The supervisor should be present in a few interviews to ensure that the enumerator is able to communicate all aspects of the study to the respondent, and is able to resolve all concerns of the respondent.

- Be mindful of translation issues. Incorrect translation can cause respondents to incorrectly interpret questions, which can affect the quality of data. Make use of local implementing partners for help in translating technical terms. Use recordings during the training sessions to help enumerators become comfortable with the translated version of the instrument.

Field Monitoring

As part of monitoring data quality in the field, the field team should also set up a mechanism for monitoring various aspects of the data collection. There should be a clear understanding of the team structure, and every member of the field team should know their role in the team. Field monitoring has the following components:

- Field coordinator

- Supervisors

- Survey progress

- Data quality checkers

- Review completed forms

Monitoring: Field coordinators

The role of the field coordinator (FC) is to carefully oversee the work of the field teams, and monitor them in the following ways:

- Regular feedback: Provide useful feedback once the pre-decided number of interviews for the day is completed, and not while interviews are still in progress.

- Clear understanding of questions: From the beginning of the data collection, regularly check in with the field team to see if questions are understood correctly by the respondents. In this case, proper and regular communication with the field team is very important.

- Reporting system: Ensure that proper reporting systems are set up, and that the supervisors and data quality checkers clearly understand the system. Ensure that all members know who their first point-of-contact is in the team.

- Staff motivation: Maintain a good relationship with everyone involved in the data collection, and keep the team motivated.

Monitoring: Supervisors

Supervisors are experienced enumerators who play an important role in monitoring the work of the enumerators. As part of this process, they perform the following tasks:

- Logistics: They organize and finalize various logistics of the data collection process, such as work plan, transport, equipment, and accommodation for the field staff.

- Link with local authorities: By introducing enumerators to local authorities, they act as a link between the enumerators and local authorities. This also improves the quality of data by establishing a comfortable working relationship between different groups involved in the data collection.

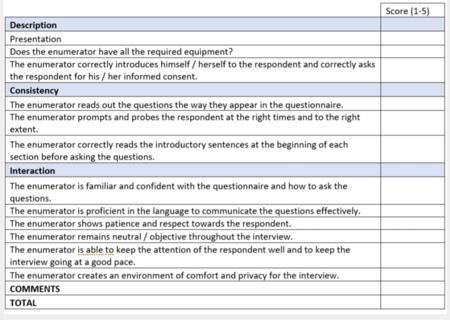

- Monitor enumerators: They monitor the work of the enumerators by observing interviews, and helping them improve their interviewing skills. Supervisors can also write their observations about each enumerator on an enumerator observation form, and share it with the field coordinator. Figure 2 below gives an example of such a form.

Monitoring: Survey progress

It is also important to track the progress of the survey. The sign of a good reporting system is that the list of people who have been interviewed matches the list of respondents who were originally selected for the interviews. In some cases, enumerators forget to share survey forms, or do not complete the survey forms, or the field team forgets to replace respondents (in cases where they were not available). Missing data can affect the data quality, which can then affect the conclusions of an impact evaluation. Therefore, the field team should take the following steps:

- Good reporting system: Create a good reporting system that involves regular sharing of completed survey forms. Compare the list of completed survey forms with the information on respondents who were selected for participation in the survey.

- Updated list of respondents: The supervisor should always keep an updated list of respondents.

- Survey log: At the same time, the field coordinator (FC) should keep a survey log which contains a list of completed survey forms. Do not share this log with supervisors.
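The comparison between the list of selected respondents and the survey log can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a prescribed tool: the function name, the household IDs, and the idea of recording replacements as a mapping from the original ID to the replacement ID are all hypothetical.

```python
def reconcile_survey_log(selected_ids, completed_ids, replacements=None):
    """Compare respondents selected for the survey with completed survey forms.

    replacements maps an originally selected ID to the ID of the respondent
    who replaced them (an assumed convention for this sketch).
    """
    replacements = replacements or {}
    # Respondents we expect a form from, after applying approved replacements.
    expected = {replacements.get(rid, rid) for rid in selected_ids}
    completed = set(completed_ids)
    return {
        "missing": sorted(expected - completed),     # selected, but no form received
        "unexpected": sorted(completed - expected),  # form received, but not selected
    }


# Hypothetical IDs: H004 was unavailable and replaced by H104.
selected = ["H001", "H002", "H003", "H004"]
completed = ["H001", "H003", "H009"]
report = reconcile_survey_log(selected, completed, replacements={"H004": "H104"})
```

Here `report["missing"]` lists forms that still need to be collected or shared, and `report["unexpected"]` lists forms that do not correspond to any selected or replacement respondent.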

Monitoring: Data quality checkers

Data quality checkers perform regular checks, such as back checks, and high frequency checks, on completed survey forms shared by enumerators. It is important to monitor the data quality checkers in the following ways:

- Report concerns: Report any concerns about data quality to the data quality checkers, for instance, errors and inconsistencies found in the data.

- Peer review: Ideally, there should be a separate team of data quality checkers and auditors. This team should edit and review the survey forms before they are submitted. They can also analyze the errors and correction sheets that are shared by the data quality checkers. Use this team to perform spot checks and back checks, and observe interviews.

- Dashboard: Set up a dashboard with results based on data checks. This creates a continuous feedback process for enumerators, and improves their accountability. Further, by making the process more transparent, it boosts motivation of the enumerators.

Monitoring: Review completed forms

Reviewing completed survey forms is another crucial part of improving data quality. The supervisors, enumerators, or even data quality checkers can conduct the form review. Follow these guidelines for this step:

- SurveyCTO forms: If the survey form was programmed in SurveyCTO, the "Go to prompt" option can list all the questions of the form on a tablet.

- Prioritize: Do not review all questions. Identify and prioritize key questions. For example, questions that determine the number of repeat groups, like household size, number of plots, number of crops, and so on.

- Structure: In this step, also review the structure of the form. Often, key questions can be found within nested repeat groups. For example, crop sales for a plot in a particular season of the year. If the form structure is too complex, it becomes hard for supervisors to locate key questions, so flag these concerns during this step.

- iefieldkit: DIME Analytics has also created the Stata package, iefieldkit. This package allows members of the field team who do not specialize in code tools to understand and review the various tasks involved in data management and data cleaning. The following two commands in iefieldkit are specifically designed for field teams to test for, and resolve, duplicate entries in the dataset:

  - ieduplicates: Identifies duplicate values.

  - iecompdup: Resolves duplicate values.

Note: Duplicate values can bias data quality checks like back checks and high frequency checks (HFCs). Therefore, resolve duplicate values before the survey forms are sent for these checks.
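To make the idea of identifying and comparing duplicate entries concrete, here is a minimal Python sketch. It does not reproduce the syntax or behaviour of ieduplicates or iecompdup. The field names (`hhid`, `enumerator`, `hh_size`) and the sample records are hypothetical.

```python
from collections import defaultdict


def find_duplicate_ids(rows, id_field="hhid"):
    """Group submissions by ID and keep only the IDs that appear more than once."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[id_field]].append(row)
    return {key: group for key, group in groups.items() if len(group) > 1}


def differing_fields(row_a, row_b):
    """List the fields in which two submissions with the same ID disagree."""
    keys = sorted(set(row_a) | set(row_b))
    return [key for key in keys if row_a.get(key) != row_b.get(key)]


# Hypothetical submissions: household H002 was submitted twice.
submissions = [
    {"hhid": "H001", "enumerator": "E1", "hh_size": 4},
    {"hhid": "H002", "enumerator": "E1", "hh_size": 6},
    {"hhid": "H002", "enumerator": "E2", "hh_size": 5},
]

duplicates = find_duplicate_ids(submissions)
diff = differing_fields(*duplicates["H002"])
```

Knowing which fields differ between two submissions helps the field team decide whether one is an accidental resubmission or whether two different households were given the same ID.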

Minimizing Attrition

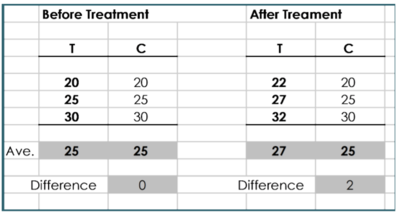

Attrition occurs when some study participants leave in the middle of an impact evaluation. Attrition rate is the percentage of participants who leave the study. Impact evaluations often involve data analysis based on both, baseline(first round) and follow-up (second round) surveys. In general, if more than 5% of the participants leave, it can have a negative impact on data quality. Further, if attrition rates differ across the treatment and control groups, it can bias the data in follow-up rounds of data collection. Figure 3 below explains this with the help of a numerical example.
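The attrition rate and the gap between treatment and control groups can also be computed directly from respondent lists. The following Python sketch uses made-up numbers (200 respondents per group at baseline) that are not taken from Figure 3. The function name and the IDs are hypothetical.

```python
def attrition_rate(baseline_ids, followup_ids):
    """Percentage of baseline respondents who are missing from the follow-up round."""
    baseline = set(baseline_ids)
    if not baseline:
        raise ValueError("baseline sample is empty")
    lost = baseline - set(followup_ids)
    return 100.0 * len(lost) / len(baseline)


# Hypothetical samples: 200 respondents per group at baseline.
treatment_rate = attrition_rate(range(0, 200), range(0, 190))    # 10 respondents lost
control_rate = attrition_rate(range(200, 400), range(200, 370))  # 30 respondents lost

# Difference in attrition between groups, in percentage points.
differential = abs(treatment_rate - control_rate)
```

In this made-up case the treatment group is at the 5% level mentioned above, while the control group is well above it, and the 10 percentage point gap between the groups is the kind of differential attrition that can bias follow-up data.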

The following table highlights some of the reasons for attrition, and the steps that the field team can take to resolve this problem:

| Cause | Solution |
|---|---|
| Respondent moved away from the location of the study | Make a plan in advance for adding respondents to the sample. Include the cost for this in the budget. |
| Respondent cannot be found | Record as much identifying information as possible during the baseline (first round) survey, such as address and phone number. Use GPS coordinates to find their location as per the baseline data. Ask nearby respondents and neighbors if they know the person. |
| Respondent refuses to participate | Provide an informed consent form to assure the respondent that all information will be confidential. Discuss and use other cooperation techniques, such as providing multiple time slots to the respondent, and adjusting according to their convenience. Consider other incentives, such as gift cards, or sharing information about possible benefits from participation. |
| In some cases, the respondent with the incorrect ID is interviewed | Ensure that all IDs match, based on the baseline data. Load the IDs in advance, and code the questionnaire to display a pop-up message. For example: "You are about to interview XYZ. Is this correct? Yes/No. If this is not correct, please go back and select the correct ID." |

High Frequency Checks (HFCs)

Before starting with data collection, the research team should work with the field team to design and code high frequency checks, as part of the data quality assurance plan. The best time to design and code these high frequency checks is in parallel to the process of questionnaire design and programming. These checks should be run daily, to provide real-time information to the field team and research team for all surveys. They include the following types of checks, depending on the source of error:

- Response quality checks.

- Enumerator checks.

- Programming checks.

- Duplicates and survey log checks.

- Other checks specific to the project or type of data.
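A minimal Python sketch of three such checks is given below: a response quality check (out-of-range values), an enumerator check (average interview duration), and a survey log check (submissions per day). The field names, the plausible range for household size, and the sample records are all assumptions made for illustration; a real project would define these in its data quality assurance plan.

```python
from collections import defaultdict
from statistics import mean


def out_of_range(records, field, low, high):
    """Response quality check: IDs whose value falls outside [low, high]."""
    return [r["hhid"] for r in records if not low <= r[field] <= high]


def mean_duration_by_enumerator(records):
    """Enumerator check: average interview duration (minutes) per enumerator."""
    durations = defaultdict(list)
    for r in records:
        durations[r["enumerator"]].append(r["duration_min"])
    return {enumerator: mean(values) for enumerator, values in durations.items()}


def submissions_per_day(records):
    """Survey log check: number of submitted forms per survey date."""
    counts = defaultdict(int)
    for r in records:
        counts[r["date"]] += 1
    return dict(counts)


# Hypothetical daily export from the survey server.
records = [
    {"hhid": "H001", "enumerator": "E1", "date": "2024-03-01", "hh_size": 4, "duration_min": 52},
    {"hhid": "H002", "enumerator": "E1", "date": "2024-03-01", "hh_size": 45, "duration_min": 48},
    {"hhid": "H003", "enumerator": "E2", "date": "2024-03-01", "hh_size": 6, "duration_min": 12},
    {"hhid": "H004", "enumerator": "E2", "date": "2024-03-02", "hh_size": 3, "duration_min": 14},
]

flagged = out_of_range(records, "hh_size", 1, 30)
durations = mean_duration_by_enumerator(records)
daily = submissions_per_day(records)
print(flagged, durations, daily)
```

Here H002 is flagged for an implausible household size, and enumerator E2's much shorter interviews would be a reason for the field team to follow up.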

Back Checks

Back checks allow the field team to verify the correctness and quality of survey data. In a back check survey, an experienced enumerator visits the same respondent around 10-15 minutes after the actual interview, and asks selected questions again. The answers from this back check survey are then compared with answers from the original survey. Keep the following things in mind about back checks:

- Methodology. Make sure every enumerator and every field team is back-checked as soon as possible. As a general rule, back-check 10-20% of the sample, with 20% of the back checks in the first two weeks of fieldwork.

- Selection of respondents. Use a random sub-sample, and select the sub-sample in advance if possible, for instance on the basis of data from a survey pilot or a baseline (first round) survey. If some enumerators are suspected of cheating, back-check their interviews too. If other quality tests identified errors in some observations, back-check these observations to resolve these errors.

- Categorize. Divide the questions for the back check survey into the following categories:

    - Straightforward questions where there is very little possibility of error. For example, age and education. If there is an error in these variables, it means there is a serious problem with the enumerator, or with the questions.

    - Sensitive or calculation-based questions where a more experienced enumerator will get the correct answer. For example, questions based on sensitive topics, or questions that involve calculations. If there is an error in these variables, there might be a need to provide further training for enumerators.

    - Specific questions about the survey instrument. Errors in this case provide feedback which can help improve the survey instrument itself.

- Resources. bcstats is a Stata package by J-PAL and IPA that automatically compares data from back check surveys and the main survey. It also provides an outline for analysis of this data.
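The comparison step can be sketched in a few lines of Python. This is not the method used by bcstats; it is only a simplified illustration that counts, for each enumerator, the share of back-checked answers that differ from the original survey. The data layout and the question names `age` and `education` are assumptions.

```python
from collections import defaultdict


def backcheck_error_rates(original, backcheck, questions):
    """Share of back-checked answers that differ from the original, per enumerator.

    Both inputs map a respondent ID to a dict of answers; the original survey
    record also carries the enumerator who conducted the interview.
    """
    compared = defaultdict(int)
    mismatched = defaultdict(int)
    for hhid, bc_answers in backcheck.items():
        orig_answers = original.get(hhid)
        if orig_answers is None:
            continue  # back check cannot be matched to an original interview
        enumerator = orig_answers["enumerator"]
        for question in questions:
            compared[enumerator] += 1
            if orig_answers[question] != bc_answers[question]:
                mismatched[enumerator] += 1
    return {e: mismatched[e] / compared[e] for e in compared}


# Hypothetical data: two straightforward questions, two respondents.
original = {
    "H001": {"enumerator": "E1", "age": 34, "education": "primary"},
    "H002": {"enumerator": "E2", "age": 51, "education": "secondary"},
}
backcheck = {
    "H001": {"age": 34, "education": "primary"},
    "H002": {"age": 15, "education": "secondary"},
}

rates = backcheck_error_rates(original, backcheck, ["age", "education"])
print(rates)  # {'E1': 0.0, 'E2': 0.5}
```

In practice the error rates would be computed separately for each question category, since a mismatch on a straightforward question points to a different problem than a mismatch on a sensitive or calculation-based one.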

Data Quality Checks for Remote Surveys

In the case of remote surveys, monitoring data quality becomes even more important. Poor quality data in remote surveys can at best reduce the effectiveness of a policy intervention, and at worst require a repeat of the entire process of data collection. Given below are some possible steps to conduct data quality checks for remote surveys.

Random audio audits

Random audio audits consist of recording random portions of an interview. They allow the research team to assess the following for a CAPI or phone survey:

- Status: That is, if the interview actually took place.

- Enumerator behaviour: That is, if the enumerator introduced themselves properly, if they read out the informed consent, and so on.

- Accuracy of collected data: That is, if the responses match what was entered by the enumerator.

Especially in the case of phone surveys (CATI), the research team can use random audio audits as the main tool for data quality assurance.

Phone-based back checks

Back checks involve selecting a small sample of questions from the original survey, and asking them again to a randomly selected subset of respondents. The research team then compares these answers with the original answers to identify gaps in enumerator training and detect enumerator fraud. For phone surveys, the research team can perform phone-based back checks along with random audio audits to ensure proper data quality. However, these may suffer from low response rates, and there might be a selection bias, since only those respondents who have a phone can be back-checked.
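One possible way to draw such a sample is sketched below in Python. It assumes a 15% share, a fixed seed for reproducibility, and hypothetical field names; respondents without a recorded phone number are excluded, which is exactly the source of the selection bias mentioned above. Sampling within each enumerator is a design choice made here so that every enumerator is back-checked.

```python
import math
import random
from collections import defaultdict


def draw_backcheck_sample(respondents, share=0.15, seed=12345):
    """Randomly pick respondents to back-check by phone, within each enumerator."""
    rng = random.Random(seed)  # fixed seed so the draw can be reproduced
    by_enumerator = defaultdict(list)
    for r in respondents:
        if r.get("phone"):  # only respondents with a phone can be reached
            by_enumerator[r["enumerator"]].append(r["hhid"])
    sample = []
    for enumerator in sorted(by_enumerator):
        ids = sorted(by_enumerator[enumerator])
        k = max(1, math.ceil(share * len(ids)))  # at least one per enumerator
        sample.extend(rng.sample(ids, k))
    return sample


# Hypothetical respondent list: 30 households, 3 enumerators, some without a phone.
respondents = [
    {
        "hhid": f"H{i:03d}",
        "enumerator": f"E{i % 3 + 1}",
        "phone": None if i % 5 == 0 else f"555-01{i:02d}",
    }
    for i in range(1, 31)
]

sample = draw_backcheck_sample(respondents)
print(sample)
```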

Related Pages

Click here for pages that link to this topic.

Additional Resources

- DIME Analytics (World Bank), Data Quality Checks

- DIME Analytics (World Bank), Assuring Data Quality

- DIME Analytics (World Bank), Survey Quality Part 1

- DIME Analytics (World Bank), Survey Quality Part 2

- Innovations for Poverty Action, Template for high frequency checks

- IPA-JPAL-SurveyCTO, Collecting High Quality Data

- SurveyCTO, Data quality with SurveyCTO

- SurveyCTO, Guide to adding audio audits

- SurveyCTO, Monitoring and Visualization