Difference between revisions of "Regression Discontinuity"


Regression Discontinuity Design (RDD) is a [[Quasi-Experimental Methods | quasi-experimental]] impact evaluation method used to evaluate programs that have a cutoff point determining who is eligible to participate. RDD allows researchers to compare the people immediately above and below the cutoff point to identify the impact of the program on a given outcome. This page will cover when to use RDD, sharp vs. fuzzy design, how to interpret results, and methods of treatment effect estimation.

==Read First==

*An RDD requires a continuous eligibility score on which the population of interest is ranked and a clearly defined cutoff point above or below which the population is determined eligible for a program.

*The eligibility index must be continuous around the cutoff point, and the population of interest around the cutoff point must be very similar in observable and unobservable characteristics.

*RDD estimates local average treatment effects around the cutoff point, where treatment and comparison units are most similar. It provides useful evidence on whether a program should be cut or expanded at the margin. However, it does not answer whether a program should exist at all: for that question, the average treatment effect provides better evidence than the local average treatment effect.

==Overview==

RDD is a key method in the toolkit of any applied researcher interested in identifying the causal effects of policies. The method was first used in 1960 by Thistlethwaite and Campbell, who were interested in identifying the causal impacts of merit awards, assigned based on observed test scores, on future academic outcomes ([https://www.princeton.edu/~davidlee/wp/RDDEconomics.pdf Lee and Lemieux, 2010]). The use of RDD has grown rapidly in recent years. Researchers have used it to evaluate electoral accountability; SME policies; social protection programs such as conditional cash transfers; and educational programs such as school grants.

In RDD, assignment to treatment and control is not random, but rather based on a clear-cut threshold (or cutoff point) of an observed variable such as age, income, or a test score. Causal inference is then made by comparing individuals on both sides of the cutoff point.

==Conditions and Assumptions==

===Conditions===

Two main conditions are needed in order to apply a regression discontinuity design:

#A continuous eligibility index: a continuous measure on which the population of interest is ranked (e.g. test score, poverty score, age).

#A clearly defined cutoff point: a point on the index above or below which the population is determined to be eligible for the program. For example, students with a test score of at least 80 out of 100 might be eligible for a scholarship, households with a poverty score less than 60 out of 100 might be eligible for food stamps, and individuals aged 67 and older might be eligible for a pension. The cutoff points in these examples are 80, 60, and 67, respectively. The cutoff point may also be referred to as the threshold.

===Assumptions===

#The eligibility index should be continuous around the cutoff point. There should be no jumps in the density of the eligibility index at the cutoff point, nor any other sign of individuals manipulating their eligibility index in order to increase their chances of being included in or excluded from the program. The McCrary density test checks this assumption by testing the density function of the eligibility index for discontinuities around the cutoff point.

#Individuals close to the cutoff point should be very similar, on average, in observed and unobserved characteristics. In the RD framework, this means that the distribution of the observed and unobserved variables should be continuous around the threshold. Even though researchers can check similarity between observed covariates, the similarity between unobserved characteristics has to be assumed. This is considered a plausible assumption to make for individuals very close to the cutoff point, that is, for a relatively narrow window.
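
The manipulation check in the first assumption can be illustrated with a rough sketch. This is not the McCrary test itself: as a simplifying assumption, it only counts units in a small window on each side of the cutoff and uses an exact binomial test to ask whether the split is compatible with an even allocation. The function name <code>binomial_density_check</code>, the window width, and the example scores are all hypothetical.

```python
from math import comb


def binomial_density_check(scores, cutoff, window):
    """Crude manipulation check: compare counts just below and just above the cutoff.

    Returns (n_left, n_right, p_value), where p_value is the two-sided exact
    binomial p-value under the null that a unit in the window is equally
    likely to fall on either side of the cutoff.
    """
    n_left = sum(1 for s in scores if cutoff - window <= s < cutoff)
    n_right = sum(1 for s in scores if cutoff <= s < cutoff + window)
    n = n_left + n_right
    k = min(n_left, n_right)
    # P(X <= k) for X ~ Binomial(n, 0.5); doubling gives the two-sided
    # p-value because this distribution is symmetric.
    tail = sum(comb(n, j) for j in range(k + 1)) / 2 ** n
    return n_left, n_right, min(1.0, 2 * tail)


# Hypothetical test scores with a cutoff of 80 (scholarship example).
smooth = [s for s in range(75, 85) for _ in range(5)]   # 5 students per score
heaped = list(range(75, 80)) + [80] * 26 + [81, 82, 83, 84]  # bunching at 80

print(binomial_density_check(smooth, cutoff=80, window=5))
print(binomial_density_check(heaped, cutoff=80, window=5))
```

A very small p-value for the heaped scores signals bunching just above the cutoff, which would cast doubt on the design. In applied work a dedicated density test would normally replace this count comparison.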

==Fuzzy vs. Sharp Design==

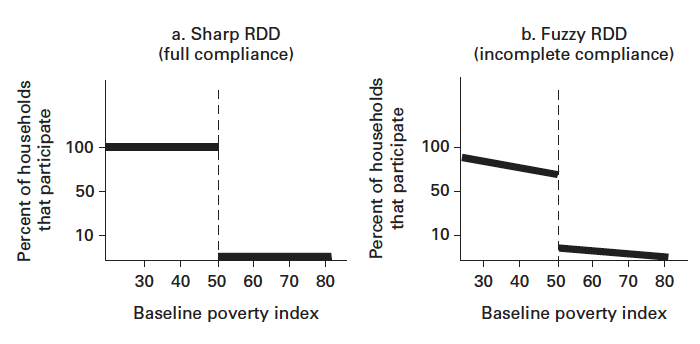

The assignment rule indicates how people are assigned or selected into the program. In practice, the assignment rule can be deterministic or probabilistic (see [https://onlinelibrary.wiley.com/doi/abs/10.1111/1468-0262.00183 Hahn et al., 2001]). If deterministic, the regression discontinuity takes a sharp design; if probabilistic, the regression discontinuity takes a fuzzy design.

[[File:RD_sharp_fuzzy.png|upright=2.5]]

===Sharp RDD===

In sharp designs, the probability of treatment changes from 0 to 1 at the cutoff. There are no cross-overs and no no-shows. For example, if a scholarship award is given to all students above a threshold test score of 80%, then the assignment rule defines treatment status deterministically with probabilities of 0 or 1. Thus, the design is sharp.

===Fuzzy RDD===

In fuzzy designs, the probability of treatment is discontinuous at the cutoff, but not to the degree of a definitive 0 to 1 jump. For example, if food stamp eligibility is given to all households below a certain income, but not all eligible households receive the food stamps, then the assignment rule defines treatment status probabilistically but not perfectly. Thus, the design is fuzzy. The fuzziness may result from imperfect compliance with the law/rule/program; imperfect implementation that treated some non-eligible units or neglected to treat some eligible units; spillover effects; or manipulation of the eligibility index.

A fuzzy design assumes that, in the absence of the assignment rule, some of those who take up the treatment would not have participated in the program. The eligibility index acts as a nudge. The subgroup that participates in a program because of the selection rule is called ''compliers'' (see e.g. [https://pdfs.semanticscholar.org/8714/260129e51abb09fd89d6ff79065af17bb106.pdf Imbens and Angrist (1994)] and [http://www.math.mcgill.ca/dstephens/AngristIV1996-JASA-Combined.pdf Angrist, Imbens, and Rubin (1996)]); under the fuzzy RDD, the treatment effects are estimated only for the group of compliers. Estimating the causal effect under the fuzzy design requires more assumptions than under the sharp design, but these assumptions are weaker than those of a general IV approach.
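
The difference between the two designs can be made concrete by computing the jump in the share of treated units at the cutoff. The following is a minimal sketch on made-up data; the function name <code>treatment_jump</code>, the scores, and the take-up pattern are assumptions chosen only for illustration.

```python
def treatment_jump(scores, treated, cutoff, window):
    """Share treated just above the cutoff minus share treated just below it."""
    below = [t for s, t in zip(scores, treated) if cutoff - window <= s < cutoff]
    above = [t for s, t in zip(scores, treated) if cutoff <= s < cutoff + window]
    return sum(above) / len(above) - sum(below) / len(below)


# Hypothetical data: scores 70..89, eligibility for scores of at least 80.
scores = list(range(70, 90))

# Sharp design: everyone at or above the cutoff is treated, nobody below.
sharp = [1 if s >= 80 else 0 for s in scores]

# Fuzzy design: two eligible units do not take up the program (no-shows)
# and one ineligible unit is treated anyway (cross-over).
fuzzy = list(sharp)
fuzzy[scores.index(83)] = 0
fuzzy[scores.index(87)] = 0
fuzzy[scores.index(74)] = 1

print(treatment_jump(scores, sharp, cutoff=80, window=10))  # jump of exactly 1
print(treatment_jump(scores, fuzzy, cutoff=80, window=10))  # jump between 0 and 1
```

In the sharp case the treatment share moves from 0 to 1; in the fuzzy case it moves from 0.1 to 0.8, so eligibility shifts the probability of treatment without determining it.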

==How to Interpret==

RDD estimates local average treatment effects around the cutoff point, where treatment and comparison units are most similar. The units to the left and right of the cutoff look more and more similar as they near the cutoff. Provided that the design meets all assumptions and conditions outlined above, the units directly to the left and right of the cutoff point should be so similar that comparing them is as credible as comparing groups formed by randomized assignment of the treatment.

Because the RDD estimates the average treatment effect locally, around the cutoff point, the estimate does not necessarily apply to units with scores further away from the cutoff point. These units may not be as similar to each other as the eligible and ineligible units close to the cutoff. RDD’s inability to compute an average treatment effect for all program participants is both a strength and a limitation, depending on the question of interest. If the evaluation primarily seeks to answer whether the program should exist or not, then the RDD will not provide a sufficient answer: the average treatment effect for the entire eligible population would be the most relevant parameter in this case. However, if the policy question of interest is whether the program should be cut or expanded at the margin, then the RDD produces precisely the local estimate needed to inform this policy decision.

Note that recent advances in the RDD literature suggest that it is not very accurate to interpret a discontinuity design as a local experiment. To be considered as good as a local experiment for the units close enough to the cutoff point, one must use a very narrow bandwidth and drop the assignment variable (or a function of it) from the regression equation. For more details on this point see [http://faculty.chicagobooth.edu/max.farrell/research/Calonico-Cattaneo-Farrell-Titiunik_2018_RESTAT.pdf Calonico et al. (2018)].

==Treatment Effect Estimation==

===Parametric Methods===

The treatment effect can be estimated parametrically as follows:

<math>y_i=\alpha+\delta X_i+h(Z_i)+\varepsilon_i</math>

where <math>y_i</math> is the outcome of interest for individual <math>i</math>, <math>X_i</math> is an indicator that takes the value 1 for individuals assigned to the treatment and 0 otherwise, <math>Z_i</math> is the assignment variable that defines an observable, clear cutoff point, and <math>h(Z_i)</math> is a flexible function of <math>Z</math>. The identification strategy hinges on the exogeneity of <math>Z</math> at the threshold. It is standard to center the assignment variable at the cutoff point, in which case one uses <math>h(Z_i-Z_0)</math>, with <math>Z_0</math> being the cutoff. Under that assumption, the parameter of interest, <math>\delta</math>, provides the treatment effect estimate.
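
The specification above can be sketched in a few lines of plain Python. As an assumption made for this example, <math>h</math> is taken to be linear with a separate slope on each side of the cutoff, in which case the OLS estimate of <math>\delta</math> equals the difference between the intercepts of two straight lines fitted to the centered score. The data are simulated and the function names are hypothetical.

```python
import random


def line_intercept(z, y):
    """Intercept of the least-squares line of y on z."""
    n = len(z)
    z_bar = sum(z) / n
    y_bar = sum(y) / n
    slope = (sum((zi - z_bar) * (yi - y_bar) for zi, yi in zip(z, y))
             / sum((zi - z_bar) ** 2 for zi in z))
    return y_bar - slope * z_bar


def sharp_rd_linear(score, outcome, cutoff):
    """Jump at the cutoff from a separate straight line on each side.

    Equivalent to OLS of y on X, (Z - Z0) and X * (Z - Z0).
    """
    centered = [s - cutoff for s in score]
    left = [(z, y) for z, y in zip(centered, outcome) if z < 0]
    right = [(z, y) for z, y in zip(centered, outcome) if z >= 0]
    return line_intercept(*zip(*right)) - line_intercept(*zip(*left))


# Simulated data (all numbers are made up): true jump of 2 at a cutoff of 80.
rng = random.Random(0)
score = [rng.uniform(70, 90) for _ in range(2000)]
outcome = [1 + 0.5 * (s - 80) + 2 * (s >= 80) + rng.gauss(0, 1) for s in score]

delta_hat = sharp_rd_linear(score, outcome, cutoff=80)
print(round(delta_hat, 2))  # should land close to the true jump of 2
```

Centering the score at the cutoff is what makes the two intercepts directly comparable: each one is the fitted outcome exactly at the threshold.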

In the case of a sharp design with perfect compliance, the parameter <math>\delta</math> identifies the average treatment effect on the treated (ATT) at the cutoff. In the case of a fuzzy design, <math>\delta</math> corresponds to the intent-to-treat effect, i.e. the effect of eligibility, rather than of the treatment itself, on the outcomes of interest. The LATE can be estimated using an IV approach, with eligibility <math>X_i</math> as the instrument for participation:

First stage: <math>P_i=\pi+\gamma X_i+h(Z_i)+\nu_i</math>

Second stage: <math>y_i=\mu+\beta\hat{P_i}+h(Z_i)+u_i</math>,

where <math>P_i</math> is a dummy variable that identifies actual participation of individual <math>i</math> in the program/intervention, <math>\gamma</math> is the jump in the participation rate at the cutoff, and <math>\beta</math> is the LATE. Note that with a parametric specification, the researcher should specify <math>h(Z_i)</math> the same way in both regressions ([http://faculty.smu.edu/millimet/classes/eco7377/papers/imbens%20lemieux.pdf Imbens and Lemieux, 2008]).
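
With a single instrument and the same <math>h</math> in both stages, the two-stage estimate equals the ratio of two jumps at the cutoff: the jump in the outcome (the intent-to-treat effect) divided by the jump in participation (the first stage). The sketch below illustrates this on simulated data, again assuming a linear <math>h</math> with separate slopes on each side; the function name <code>jump_at_zero</code> and every number in the simulation are hypothetical.

```python
import random


def jump_at_zero(z, v):
    """Right minus left least-squares intercept at z = 0 (score already centered)."""
    def intercept(pairs):
        n = len(pairs)
        z_bar = sum(p[0] for p in pairs) / n
        v_bar = sum(p[1] for p in pairs) / n
        slope = (sum((p[0] - z_bar) * (p[1] - v_bar) for p in pairs)
                 / sum((p[0] - z_bar) ** 2 for p in pairs))
        return v_bar - slope * z_bar

    left = [(zi, vi) for zi, vi in zip(z, v) if zi < 0]
    right = [(zi, vi) for zi, vi in zip(z, v) if zi >= 0]
    return intercept(right) - intercept(left)


# Simulated fuzzy design (made-up numbers): eligibility raises the probability
# of participation from 0.1 to 0.8, and participation raises the outcome by 3.
rng = random.Random(1)
z = [rng.uniform(-10, 10) for _ in range(4000)]   # centered score
p = [1 if rng.random() < (0.8 if zi >= 0 else 0.1) else 0 for zi in z]
y = [1 + 0.3 * zi + 3 * pi + rng.gauss(0, 1) for zi, pi in zip(z, p)]

itt = jump_at_zero(z, y)          # reduced form: jump in the outcome
first_stage = jump_at_zero(z, p)  # jump in the participation rate
late = itt / first_stage          # ratio (Wald) estimate for compliers

print(round(itt, 2), round(first_stage, 2), round(late, 2))
```

Because only about 70 percent of units change participation status at the cutoff in this simulation, the intent-to-treat effect is smaller than the effect of participation itself, and dividing by the first stage rescales it.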

Despite the natural appeal of a parametric method such as the one just outlined, it has some practical drawbacks. First, the right functional form of <math>h(Z_i)</math> is never known. Researchers are thus encouraged to fit the model with different specifications of <math>h(Z_i)</math> ([https://www.princeton.edu/~davidlee/wp/RDDEconomics.pdf Lee and Lemieux, 2010]), particularly when they must use data farther away from the cutoff point to have enough statistical power.

Second, although some authors test the sensitivity of results using high-order polynomials, recent work argues against them because they assign too much weight to observations far from the cutoff point ([https://www.nber.org/papers/w20405.pdf Gelman and Imbens, 2014]).

===Non-Parametric Methods===

Another way of estimating treatment effects with RDD is via non-parametric methods. The use of non-parametric methods has been growing in recent years, both to estimate treatment effects and to check the robustness of estimates obtained parametrically. This might be partially explained by the increasing number of available Stata commands, but perhaps more importantly, by some attractive properties of the method compared to parametric ones (see e.g. [https://www.nber.org/papers/w20405.pdf Gelman and Imbens (2014)] for this point). In particular, non-parametric methods provide estimates based on data closer to the cutoff, reducing the bias that may otherwise result from using data farther away from the cutoff to estimate local treatment effects.

The use of non-parametric methods does not come without costs: the researcher must make an array of decisions about the kernel function, the algorithm for selecting the optimal bandwidth size, and the specifications. [https://www.nber.org/papers/w20405.pdf Gelman and Imbens (2014)] suggest the use of local linear and at most local quadratic polynomials.
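
A local linear estimator can be sketched as a weighted straight-line fit on each side of the cutoff, with weights from a kernel that shrink to zero at the edge of the bandwidth. The example below is an illustration only: it uses a triangular kernel and a hand-picked bandwidth, and it omits the data-driven bandwidth selection and bias correction that dedicated RDD software provides. The function name, the curved <math>h</math>, and the bandwidths are assumptions.

```python
def local_linear_rd(z, y, bandwidth, kernel="triangular"):
    """Local linear RD estimate at a cutoff of 0 (score already centered).

    Fits a weighted straight line on each side using observations with
    |z| < bandwidth and returns the difference in intercepts.
    """
    def weight(zi):
        u = abs(zi) / bandwidth
        if u >= 1:
            return 0.0
        return 1 - u if kernel == "triangular" else 1.0

    def intercept(side):
        pts = [(zi, yi, weight(zi)) for zi, yi in zip(z, y)
               if side(zi) and weight(zi) > 0]
        sw = sum(w for _, _, w in pts)
        z_bar = sum(w * zi for zi, _, w in pts) / sw
        y_bar = sum(w * yi for _, yi, w in pts) / sw
        slope = (sum(w * (zi - z_bar) * (yi - y_bar) for zi, yi, w in pts)
                 / sum(w * (zi - z_bar) ** 2 for zi, _, w in pts))
        return y_bar - slope * z_bar

    return intercept(lambda zi: zi >= 0) - intercept(lambda zi: zi < 0)


# Noise-free illustration (made-up function): curved h(z), true jump of 2.
z = [(i + 0.5) / 100 - 10 for i in range(2000)]   # grid on (-10, 10)
y = [0.01 * zi ** 3 + (2 if zi >= 0 else 0) for zi in z]

wide = local_linear_rd(z, y, bandwidth=10.01, kernel="uniform")  # all data
narrow = local_linear_rd(z, y, bandwidth=2)                      # triangular

print(round(wide, 2), round(narrow, 2))
```

With this curved <math>h</math>, the straight lines fitted to all the data miss the true jump badly (the estimate even has the wrong sign), while the fit restricted to observations near the cutoff recovers it closely. This is the bias reduction that motivates local methods.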

====Bandwidth size====

In practice, the bandwidth size depends on data availability. Ideally, one would like to have a large enough sample to run the regressions using information very close to the cutoff. The main advantage of using a very narrow bandwidth is that the functional form <math>h(Z_i)</math> becomes much less of a worry and treatment effects can be obtained with parametric regression using a linear or piecewise linear specification of the assignment variable (see [https://www.princeton.edu/~davidlee/wp/RDDEconomics.pdf Lee and Lemieux (2010)] for this point). However, [https://files.eric.ed.gov/fulltext/ED511782.pdf Schochet (2008)] points out two disadvantages of a narrow bandwidth relative to a wider one:

#For a given sample size, a narrower bandwidth could yield less precise estimates if the outcome-score relationship can be correctly modelled using a wider range of scores.

#External validity: extrapolating results to units further away from the threshold using the estimated parametric regression lines may be more defensible if you have a wider range of scores to fit these lines over.

===Placebo Tests===

Falsification (or placebo) tests are essential when using RDD as an identification strategy. The researcher needs to convince the reader (and referees!) that the discontinuity exploited to identify the causal impacts of an intervention was very likely caused by the rule assigning units to the intervention. In practice, researchers use fake cutoffs or different cohorts to run those tests. Examples can be seen [https://www.princeton.edu/~davidlee/wp/RDrand.pdf here], [http://onlinelibrary.wiley.com/doi/10.1111/rssa.12003/abstract here], and [https://www.cambridge.org/core/services/aop-cambridge-core/content/view/192AB48618B0E0450C93E97BE8321218/S0003055416000253a.pdf/deliberate_disengagement_how_education_can_decrease_political_participation_in_electoral_authoritarian_regimes.pdf here].
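
A fake-cutoff test can be sketched by re-running the same estimator at thresholds where no jump should exist. The example below uses simulated data and a simple linear fit within a fixed bandwidth; the function name <code>rd_jump</code>, the placebo cutoffs of 70 and 90, and the bandwidth are assumptions made for illustration.

```python
import random


def rd_jump(score, outcome, cutoff, bandwidth):
    """Difference in least-squares intercepts at `cutoff`, using a straight
    line on each side and only observations within `bandwidth` of it."""
    def intercept(pts):
        n = len(pts)
        z_bar = sum(z for z, _ in pts) / n
        y_bar = sum(y for _, y in pts) / n
        slope = (sum((z - z_bar) * (y - y_bar) for z, y in pts)
                 / sum((z - z_bar) ** 2 for z, _ in pts))
        return y_bar - slope * z_bar

    pts = [(s - cutoff, y) for s, y in zip(score, outcome)
           if abs(s - cutoff) < bandwidth]
    left = [p for p in pts if p[0] < 0]
    right = [p for p in pts if p[0] >= 0]
    return intercept(right) - intercept(left)


# Simulated data (made-up numbers): true jump of 2 at a cutoff of 80.
rng = random.Random(2)
score = [rng.uniform(60, 100) for _ in range(8000)]
outcome = [1 + 0.5 * (s - 80) + 2 * (s >= 80) + rng.gauss(0, 1) for s in score]

real = rd_jump(score, outcome, cutoff=80, bandwidth=5)
# Fake cutoffs are placed so that their windows never cross the real cutoff.
placebos = {c: rd_jump(score, outcome, cutoff=c, bandwidth=5) for c in (70, 90)}

print(round(real, 2), {c: round(v, 2) for c, v in placebos.items()})
```

An estimate near the true jump at the real cutoff, together with estimates near zero at the fake cutoffs, is the pattern a credible design should show. Keeping each placebo window on one side of the real cutoff avoids contaminating the placebo estimate with the genuine discontinuity.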

== Back to Parent ==

This article is part of the topic [[Quasi-Experimental Methods]]

== Additional Resources ==

*For more information on Stata commands for RDD, please visit this [https://sites.google.com/site/matiasdcattaneo/software page].

*For a discussion of power calculations for RDD, please see the World Bank blog posts [http://blogs.worldbank.org/impactevaluations/power-calculations-regression-discontinuity-evaluations-part-1 here], [http://blogs.worldbank.org/impactevaluations/power-calculations-regression-discontinuity-evaluations-part-2 here] and [http://blogs.worldbank.org/impactevaluations/power-calculations-regression-discontinuity-evaluations-part-3 here].

*See examples and visualizations of RDD applications in David Evans’ [https://blogs.worldbank.org/impactevaluations/regression-discontinuity-porn blog post].

*For those interested in learning more about RDD and its recent extensions, see this practical introduction [http://www-personal.umich.edu/~cattaneo/books/Cattaneo-Idrobo-Titiunik_2017_Cambridge.pdf here]. For more advanced content, see this [http://www.emeraldinsight.com/doi/book/10.1108/S0731-9053201738 e-book].

*Owen Ozier’s World Bank [http://pubdocs.worldbank.org/en/663221440083705317/08-Regression-Discontinuity-Owen-Ozier.pdf Regression Discontinuity training]

*Lee and Lemieux’s [http://www.princeton.edu/~davidlee/wp/RDDEconomics.pdf Regression discontinuity designs in economics]

*Northwestern's [https://www.ipr.northwestern.edu/workshops/past-workshops/quasi-experimental-design-and-analysis-in-education/quasi-experiments/docs/QE-Day2.pdf Regression Discontinuity] slides

*[https://www.chime.ucla.edu/seminars/RCMAR_Wherry.pdf An Introduction to Regression Discontinuity Design] by Laura Wherry

*Pischke’s [http://econ.lse.ac.uk/staff/spischke/ec533/RD.pdf Regression Discontinuity Design] slides

*Yamamoto’s [http://web.mit.edu/teppei/www/teaching/Keio2016/05rd.pdf Regression Discontinuity Design] slides

[[Category: Quasi-Experimental Methods]]

Latest revision as of 15:45, 28 May 2019
