Difference between revisions of "Ieduplicates"

Kbjarkefur (talk | contribs) |

|||

| (17 intermediate revisions by 3 users not shown) | |||

| Line 1: | Line 1: | ||

<code>ieduplicates</code> is | <code>ieduplicates</code> is the second command in the [[Stata Coding Practices|Stata]] package created by [https://www.worldbank.org/en/research/dime/data-and-analytics DIME Analytics], <code>[[iefieldkit]]</code>. <code>ieduplicates</code> identifies [[Duplicates and Survey Logs | duplicate values]] in [[ID Variable Properties|ID variables]]. '''ID variables''' are those that uniquely identify every [[Unit of Observation|observation]] in a [[Master Dataset|dataset]], for example, ''household_id''. It then [[Exporting Analysis|exports]] them to an Excel file that the [[Impact Evaluation Team|research team]] can use to resolve these '''duplicates'''. The '''research team''' should run <code>ieduplicates</code> with each new batch of incoming data to ensure [[Monitoring Data Quality|high quality data]] before [[Data Cleaning | cleaning]] and [[Data Analysis | analysis]]. | ||

==Read First== | ==Read First== | ||

* [[Stata Coding Practices|Stata coding practices]]. | * Please refer to [[Stata Coding Practices|Stata coding practices]] for coding best practices in '''Stata'''. | ||

* <code>[[iefieldkit]]</code>. | * <code>ieduplicates</code> is part of the package <code>[[iefieldkit]]</code>, which has been developed by [https://www.worldbank.org/en/research/dime/data-and-analytics DIME Analytics]. | ||

* <code>ieduplicates</code> identifies duplicates in [[ID Variable Properties|ID variables]], and then <code>[[iecompdup]]</code> resolves these issues. | * <code>ieduplicates</code> identifies duplicates in [[ID Variable Properties|ID variables]], and then <code>[[iecompdup]]</code> resolves these issues. | ||

* To install <code>ieduplicates</code>, | * To install <code>ieduplicates</code>, as well as other commands in the <code>iefieldkit</code> package, type <syntaxhighlight lang="Stata" inline>ssc install iefieldkit</syntaxhighlight> in '''Stata''', as this command is a part of the <code>iefieldkit</code> package. | ||

* For instructions and available options, type <syntaxhighlight lang="Stata" inline>help ieduplicates</syntaxhighlight>. | |||

* For instructions and available options, type < | |||

== Overview == | == Overview == | ||

The <code>ieduplicates</code> and <code>[[iecompdup]]</code> commands are meant to help [[Impact Evaluation Team|research teams]] deal with [[Duplicates and Survey Logs|duplicate observations]] in [[Primary Data Collection|primary data]]. These commands are designed to identify and resolve duplicate instances of an [[ID Variable Properties|ID variable]] in raw survey data, and ensure that each | The <code>ieduplicates</code> and <code>[[iecompdup]]</code> commands are meant to help [[Impact Evaluation Team|research teams]] deal with [[Duplicates and Survey Logs|duplicate observations]] in [[Primary Data Collection|primary data]]. These commands are designed to identify and resolve '''duplicate''' instances of an [[ID Variable Properties|ID variable]] in raw [[Survey Pilot|survey]] data, and ensure that each observation is [[ID Variable Properties#Property 1: Uniquely Identifying|uniquely]] and | ||

[[ID Variable Properties#Property 2: Fully Identifying|fully identified]]. The commands combine four key tasks to resolve duplicate values: | [[ID Variable Properties#Property 2: Fully Identifying|fully identified]]. The commands combine four key tasks to resolve duplicate values: | ||

# ''' | #Identifying '''duplicate''' entries | ||

# ''' | # Comparing observations with the same '''ID value''' | ||

# ''' | #Tracking and [[Data Documentation|documenting]] changes to the '''ID variable''' | ||

# ''' | #Applying the necessary corrections to the data | ||

In any [[Master Dataset|dataset]], certain '''variables''' should be unique on purpose, to allow the '''research team''' to identify them during further [[Data Analysis|analysis]]. For example, suppose you select '''household_id''' as the '''unique ID variable '''. Now suppose you pick the observation with ''household_id''= "123456" for [[Back Checks|back checks]], but the '''dataset ''' has two observations with ''household_id'' = "123456". In this case, it is important to resolve these '''duplicate observations ''' before performing the '''back check'''. | |||

When you run <code>ieduplicates</code> for the first time, it will create a '''duplicates correction template '''. This template will list all observations that contain '''duplicate values ''' of an '''ID variable''' that should be unique. In the example above, after creating this template, <code>ieduplicates</code> will, by default, display a message pointing out that ''household_id'' does not uniquely and fully identify the data. It will also stop your code, and require you to fill the correction template before you can move on. | |||

== Syntax == | == Syntax == | ||

The basic syntax for <code>ieduplicates</code> is as follows: | The basic syntax for <code>ieduplicates</code> is as follows: | ||

<syntaxhighlight lang="Stata">ieduplicates id_varname using "filename.xlsx" | |||

, uniquevars( | , uniquevars(varlist)</syntaxhighlight> | ||

As inputs, <code>ieduplicates</code> requires the following : | As inputs, <code>ieduplicates</code> requires the following : | ||

* ''id_varname'': This is the name of the single, unique [[ID Variable Properties|ID variable]]. This '''variable ''' must be such that it would be an unacceptable [[Duplicates and Survey Logs|duplicate]] in the [[Master Dataset|dataset]], and so must never be repeated. If there are two or more '''variables ''' that identify the observation in the '''dataset ''', you should create a single '''ID variable''' that is unique for the '''dataset '''. This '''variable ''' could be either a string or a number. For example, ''household_id''. | |||

* ''filename.xlsx'': This provides the name of the Excel file in which <code>ieduplicates</code> will display the '''duplicates correction template'''. The file name in this case is specified with the help of <code>using</code>, and must include an [[Stata Coding Practices#File Paths|absolute file path]]. For example, ''C:/myIE/Documentation/DupReport.xlsx'' is the absolute file path for the file called ''DupReport.xlsx''. Since the output is an Excel sheet, even those members of the research team who do not know [[Stata Coding Practices|Stata]] can read the report, and make corrections. | |||

* <code>uniquevars()</code>: Finally, <code>ieduplicates</code> uses one or multiple '''variables ''' specified within '''<code>()</code>''' to uniquely identify each observation in the '''dataset '''. However, most data collection tools only use one '''variable ''' for this purpose. For example, SurveyCTO creates this '''variable ''' automatically, and names it ''KEY''. | |||

For example, if there are no observations which have duplicate values of ''household_id'', for instance, <code>ieduplicates</code> will display a message saying the '''dataset''' is uniquely and fully identified on the basis of ''household_id''. In such a case, there will be no output, and this command will leave the data unchanged. | |||

However, if there are observations which have '''duplicate values ''' of ''household_id'', <code>ieduplicates</code> will save the output to an Excel sheet called ''DupReport.xlsx''. This file will contain information on these observations in the form of the '''duplicates correction template''', and <code>ieduplicates</code> will also stop your code with a message listing the repeated values under ''household_id''. See "Figure 1" below for a sample correction template. | |||

== Implementation == | == Implementation == | ||

The following is the process for using <code>ieduplicates</code>: | The following is the process for using <code>ieduplicates</code>: | ||

# '''Run''' <code>ieduplicates</code> ''' on the raw data.''' If there are no duplicate observations, then you are done. Skip the rest of the steps. If there are duplicates, the command will output an Excel file which contains the '''duplicates correction template'''. It will display a message with a link to this file, and stop the code from moving forward. It will also show a message listing the duplicate ID values. | # '''Run''' <code>ieduplicates</code> ''' on the raw data.''' If there are no [[Duplicate and Survey Logs|duplicate observations]], then you are done. Skip the rest of the steps. If there are '''duplicates ''', the command will output an Excel file which contains the '''duplicates correction template'''. It will display a message with a link to this file, and stop the code from moving forward. It will also show a message listing the '''duplicate ID values '''. | ||

# '''Open the duplicates correction template.''' This template will list each duplicate entry of the ID variable, and information about each observation. It also contains 5 blank columns - | |||

# '''Use''' <code>[[iecompdup]]</code> '''for more information.''' Sometimes the template is not enough to solve a particular issue. In such cases, run the <code>[[iecompdup]]</code> command on the same dataset. | # '''Open the duplicates correction template.''' This template will list each '''duplicate entry ''' of the '''ID variable ''', and information about each observation. It also contains 5 blank columns - ''correct'', ''drop'', ''newid'', ''initials'', and ''notes''. Use these columns to make corrections, and include comments to [[Data Documentation|document]] the corrections. | ||

# '''Overwrite the previous file.''' After entering all the corrections to the template, save the Excel file in the same location with the same name. | |||

# '''Run''' <code>ieduplicates</code> '''again.''' This will apply the corrections you made in the previous steps. Now if you use the | # '''Use''' <code>[[iecompdup]]</code> '''for more information.''' Sometimes the template is not enough to solve a particular issue. In such cases, run the <code>[[iecompdup]]</code> command on the same [[Master Dataset|dataset]]. | ||

# '''Do not overwrite the | |||

# '''Overwrite the previous file.''' After entering all the corrections to the template, save the Excel file in the same location with the same name. | |||

# '''Run''' <code>ieduplicates</code> '''again.''' This will apply the corrections you made in the previous steps. Now if you use the ''force'' option, it will only remove those '''duplicates ''' that you did not resolve. | |||

# '''Do not overwrite the original raw data.''' Save the resulting '''dataset ''' under a different [[Naming Conventions|name]]. | |||

# '''Repeat these steps with each new round of data.''' | # '''Repeat these steps with each new round of data.''' | ||

==== Sample template ==== | ==== Sample template ==== | ||

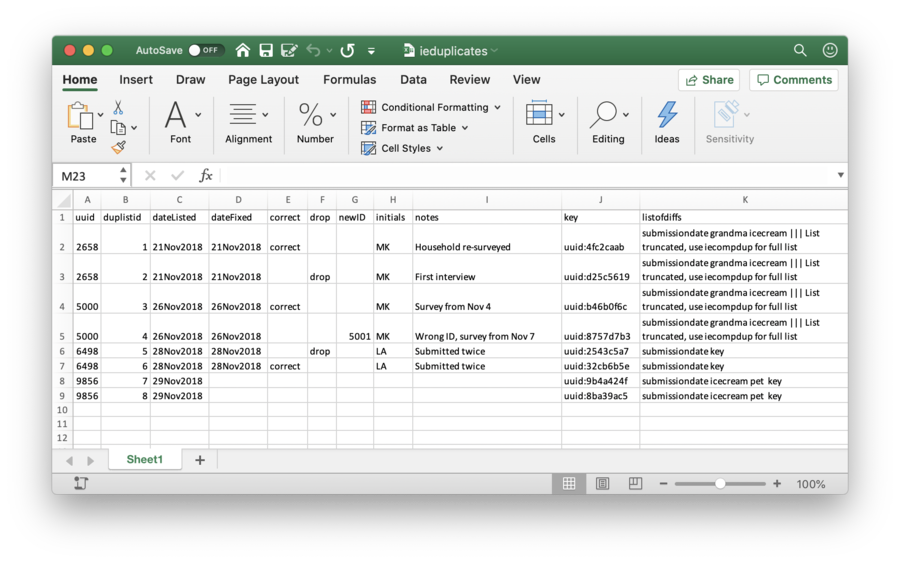

A sample '''duplicates correction template''' created by <code>ieduplicates</code> is displayed below. | A sample '''duplicates correction template''' created by <code>ieduplicates</code> is displayed below. | ||

| Line 49: | Line 57: | ||

== Duplicates Correction Template == | == Duplicates Correction Template == | ||

The <code>ieduplicates</code> exports the '''duplicates correction template''' to the Excel file, based on the following syntax: | The <code>ieduplicates</code> exports the '''duplicates correction template''' to the Excel file, based on the following syntax: | ||

* <code>force</code>: Removes all observations that contain duplicate values of | <syntaxhighlight lang="Stata">ieduplicates id_varname using "filename.xlsx" | ||

, uniquevars(varlist) | |||

[force keepvars(varlist) tostringok droprest nodaily | |||

duplistid(string) datelisted(string) datefixed(string) correct(string) | |||

drop(string) newid(string) initials(''string) notes(string) listofdiffs(string)]</syntaxhighlight> | |||

* <code>force</code>: Removes all observations that contain [[Duplicates and Survey Logs|duplicate values]] of ''id_varname'' from the data. As a result, it keeps only uniquely and fully identified observations. The <code>force</code> option is required so that you know that <code>ieduplicates</code> is making changes to your [[Master Dataset|dataset]]. Do not overwrite the original raw data with the one that this command generates, otherwise you will lose the original data. Save this new '''dataset ''' with a different name. | |||

* <code>duplistid</code>: Uniquely identifies each of the duplicate observations in the '''duplicates correction template'''. It does this by assigning | * <code>duplistid</code>: Uniquely identifies each of the '''duplicate observations''' in the '''duplicates correction template'''. It does this by assigning ''1'' to the first instance of a '''duplicate value''', ''2'' to the second instance, and so on. | ||

* <code>datelisted</code>: Indicates the date on which the observation was first included in the template. | * <code>datelisted</code>: Indicates the date on which the observation was first included in the template. | ||

* <code>listofdiffs</code>: Lists the variables in the | * <code>listofdiffs</code>: Lists the '''variables''' in the '''dataset''' that are different for the observations that have '''duplicate values''' of the '''ID variable'''. You can also rename these columns by specifying the new column name under their respective options. | ||

* <code>correct</code>, <code>drop</code>, <code>newID</code>, <code>initials</code>, and <code>notes</code>: Creates 5 blank columns which you can fill to make corrections where needed, and complete the template. | * <code>correct</code>, <code>drop</code>, <code>newID</code>, <code>initials</code>, and <code>notes</code>: Creates 5 blank columns which you can fill to make corrections where needed, and complete the template. | ||

This completed template then acts like a permanent [[Data Documentation|documentation]] of how the [[Impact Evaluation Team|research team]] resolved duplicate ID variables in the raw data. There are three options for | This completed template then acts like a permanent [[Data Documentation|documentation]] of how the [[Impact Evaluation Team|research team]] resolved '''duplicate ID variables''' in the raw data. There are three options for | ||

resolving duplicate observations. They appear in the form of | resolving '''duplicate observations'''. They appear in the form of ''correct'', ''drop'', and ''newID'' columns in the template. Consider the following examples to understand how to fill these columns: | ||

* | |||

* ''drop'': If you want to keep one of the duplicate observations and drop another, then write ''=correct'' in the ''correct'' column for the observation you want to keep, and ''drop'' in the ''drop'' column for the observation you want to drop. Make sure you mention the correct value under the ''key'' column for the observations you want to keep, and the ones that you want to drop from the dataset. This is important because SurveyCTO creates a unique ''key'' for every observation, even if two or more observations have duplicate variable IDs by mistake. | |||

* | * ''newID'': If you want to keep one of the '''duplicates''' and assign a new '''unique ID''' to another one, write ''correct'' in the ''correct'' column for the observation you want to keep, and the new corrected ID value in the ''newID'' column for the observation to which you want to assign the new '''unique ID'''. | ||

* '''Combine both methods''': You can also combine these two methods if you have more than 2 duplicate observations. Note that you must always indicate which observation you want to keep for each group of duplicate observations. | * '''Combine both methods''': You can also combine these two methods if you have more than 2 '''duplicate observations'''. Note that you must always indicate which observation you want to keep for each group of '''duplicate observations'''. | ||

After entering your corrections, save the file and run <code>ieduplicates</code> again to apply the corrections to the dataset. | After entering your corrections, save the file and run <code>ieduplicates</code> again to apply the corrections to the '''dataset'''. | ||

Since <code>ieduplicates</code> should be used frequently as new data comes in from the field, the command also manages a subfolder called | Since <code>ieduplicates</code> should be used frequently as new data comes in from the field, the command also manages a subfolder called ''/Daily/'' in the same folder which contains the main Excel file. <code>ieduplicates</code> uses this subfolder to save a backup version (along with the date) for every time the template is updated. If you do not wish to use this feature, use the ''nodaily'' option which prevents the creation of backups. | ||

'''Note:''' If two different templates are generated on the same day, it saves the second with an additional time stamp on the name. This is especially useful in case the main corrections template, or any of its contents get deleted. You can restore a backup version by simply copying it out of the | '''Note:''' If two different templates are generated on the same day, it saves the second with an additional time stamp on the name. This is especially useful in case the main corrections template, or any of its contents get deleted. You can restore a backup version by simply copying it out of the ''/Daily/'' folder and remove the date from the name. | ||

== Related Pages == | == Related Pages == | ||

Latest revision as of 20:40, 11 August 2023

ieduplicates is the second command in the Stata package created by DIME Analytics, iefieldkit. ieduplicates identifies duplicate values in ID variables. ID variables are those that uniquely identify every observation in a dataset, for example, household_id. It then exports them to an Excel file that the research team can use to resolve these duplicates. The research team should run ieduplicates with each new batch of incoming data to ensure high quality data before cleaning and analysis.

Read First

- Please refer to Stata coding practices for coding best practices in Stata.

ieduplicatesis part of the packageiefieldkit, which has been developed by DIME Analytics.ieduplicatesidentifies duplicates in ID variables, and theniecompdupresolves these issues.- To install

ieduplicates, as well as other commands in theiefieldkitpackage, typessc install iefieldkitin Stata, as this command is a part of theiefieldkitpackage. - For instructions and available options, type

help ieduplicates.

Overview

The ieduplicates and iecompdup commands are meant to help research teams deal with duplicate observations in primary data. These commands are designed to identify and resolve duplicate instances of an ID variable in raw survey data, and ensure that each observation is uniquely and

fully identified. The commands combine four key tasks to resolve duplicate values:

- Identifying duplicate entries

- Comparing observations with the same ID value

- Tracking and documenting changes to the ID variable

- Applying the necessary corrections to the data

In any dataset, certain variables should be unique on purpose, to allow the research team to identify them during further analysis. For example, suppose you select household_id as the unique ID variable . Now suppose you pick the observation with household_id= "123456" for back checks, but the dataset has two observations with household_id = "123456". In this case, it is important to resolve these duplicate observations before performing the back check.

When you run ieduplicates for the first time, it will create a duplicates correction template . This template will list all observations that contain duplicate values of an ID variable that should be unique. In the example above, after creating this template, ieduplicates will, by default, display a message pointing out that household_id does not uniquely and fully identify the data. It will also stop your code, and require you to fill the correction template before you can move on.

Syntax

The basic syntax for ieduplicates is as follows:

ieduplicates id_varname using "filename.xlsx"

, uniquevars(varlist)

As inputs, ieduplicates requires the following :

- id_varname: This is the name of the single, unique ID variable. This variable must be such that it would be an unacceptable duplicate in the dataset, and so must never be repeated. If there are two or more variables that identify the observation in the dataset , you should create a single ID variable that is unique for the dataset . This variable could be either a string or a number. For example, household_id.

- filename.xlsx: This provides the name of the Excel file in which

ieduplicateswill display the duplicates correction template. The file name in this case is specified with the help ofusing, and must include an absolute file path. For example, C:/myIE/Documentation/DupReport.xlsx is the absolute file path for the file called DupReport.xlsx. Since the output is an Excel sheet, even those members of the research team who do not know Stata can read the report, and make corrections.

uniquevars(): Finally,ieduplicatesuses one or multiple variables specified within()to uniquely identify each observation in the dataset . However, most data collection tools only use one variable for this purpose. For example, SurveyCTO creates this variable automatically, and names it KEY.

For example, if there are no observations which have duplicate values of household_id, for instance, ieduplicates will display a message saying the dataset is uniquely and fully identified on the basis of household_id. In such a case, there will be no output, and this command will leave the data unchanged.

However, if there are observations which have duplicate values of household_id, ieduplicates will save the output to an Excel sheet called DupReport.xlsx. This file will contain information on these observations in the form of the duplicates correction template, and ieduplicates will also stop your code with a message listing the repeated values under household_id. See "Figure 1" below for a sample correction template.

Implementation

The following is the process for using ieduplicates:

- Run

ieduplicateson the raw data. If there are no duplicate observations, then you are done. Skip the rest of the steps. If there are duplicates , the command will output an Excel file which contains the duplicates correction template. It will display a message with a link to this file, and stop the code from moving forward. It will also show a message listing the duplicate ID values .

- Open the duplicates correction template. This template will list each duplicate entry of the ID variable , and information about each observation. It also contains 5 blank columns - correct, drop, newid, initials, and notes. Use these columns to make corrections, and include comments to document the corrections.

- Use

iecompdupfor more information. Sometimes the template is not enough to solve a particular issue. In such cases, run theiecompdupcommand on the same dataset.

- Overwrite the previous file. After entering all the corrections to the template, save the Excel file in the same location with the same name.

- Run

ieduplicatesagain. This will apply the corrections you made in the previous steps. Now if you use the force option, it will only remove those duplicates that you did not resolve.

- Do not overwrite the original raw data. Save the resulting dataset under a different name.

- Repeat these steps with each new round of data.

Sample template

A sample duplicates correction template created by ieduplicates is displayed below.

Duplicates Correction Template

The ieduplicates exports the duplicates correction template to the Excel file, based on the following syntax:

ieduplicates id_varname using "filename.xlsx"

, uniquevars(varlist)

[force keepvars(varlist) tostringok droprest nodaily

duplistid(string) datelisted(string) datefixed(string) correct(string)

drop(string) newid(string) initials(''string) notes(string) listofdiffs(string)]

force: Removes all observations that contain duplicate values of id_varname from the data. As a result, it keeps only uniquely and fully identified observations. Theforceoption is required so that you know thatieduplicatesis making changes to your dataset. Do not overwrite the original raw data with the one that this command generates, otherwise you will lose the original data. Save this new dataset with a different name.

duplistid: Uniquely identifies each of the duplicate observations in the duplicates correction template. It does this by assigning 1 to the first instance of a duplicate value, 2 to the second instance, and so on.

datelisted: Indicates the date on which the observation was first included in the template.

listofdiffs: Lists the variables in the dataset that are different for the observations that have duplicate values of the ID variable. You can also rename these columns by specifying the new column name under their respective options.

correct,drop,newID,initials, andnotes: Creates 5 blank columns which you can fill to make corrections where needed, and complete the template.

This completed template then acts like a permanent documentation of how the research team resolved duplicate ID variables in the raw data. There are three options for resolving duplicate observations. They appear in the form of correct, drop, and newID columns in the template. Consider the following examples to understand how to fill these columns:

- drop: If you want to keep one of the duplicate observations and drop another, then write =correct in the correct column for the observation you want to keep, and drop in the drop column for the observation you want to drop. Make sure you mention the correct value under the key column for the observations you want to keep, and the ones that you want to drop from the dataset. This is important because SurveyCTO creates a unique key for every observation, even if two or more observations have duplicate variable IDs by mistake.

- newID: If you want to keep one of the duplicates and assign a new unique ID to another one, write correct in the correct column for the observation you want to keep, and the new corrected ID value in the newID column for the observation to which you want to assign the new unique ID.

- Combine both methods: You can also combine these two methods if you have more than 2 duplicate observations. Note that you must always indicate which observation you want to keep for each group of duplicate observations.

After entering your corrections, save the file and run ieduplicates again to apply the corrections to the dataset.

Since ieduplicates should be used frequently as new data comes in from the field, the command also manages a subfolder called /Daily/ in the same folder which contains the main Excel file. ieduplicates uses this subfolder to save a backup version (along with the date) for every time the template is updated. If you do not wish to use this feature, use the nodaily option which prevents the creation of backups.

Note: If two different templates are generated on the same day, it saves the second with an additional time stamp on the name. This is especially useful in case the main corrections template, or any of its contents get deleted. You can restore a backup version by simply copying it out of the /Daily/ folder and remove the date from the name.

Related Pages

Click here for pages that link to this topic.

This page is part of the topic iefieldkit. Also see iecompdup.

Additional Resources

- DIME Analytics (World Bank), Real Time Data Quality Checks

- DIME Analytics (World Bank), The

iefieldkitGitHub page